In July 2021, Sarah Frank, a rising freshman at Brown University, uploaded a 56-second TikTok video that disrupted 4,600 scientific research projects. Sarah has 59,000 followers on TikTok. The video was about side hustles, specifically Prolific.co. Prolific offers an online tool to easily recruit and pay research participants and conduct what it calls “ethical and trustworthy” research. The video got 4.1 million views in the month after it was posted and sent tens of thousands of new users flooding to the Prolific platform. As a result, Prolific’s pool of U.S. research participants jumped from 40,000 to 80,000 almost overnight. The influx of young women in their early 20s dramatically skewed the demographics of Prolific’s survey pool. Over 4,600 research projects were affected, and Prolific ended up refunding research sponsors.

The TikTok Video

Sarah Frank is very active on social media. She has posted hundreds of videos on TikTok, maintains an active website and an Amazon storefront, has published two books, runs a college admissions advice site, and built a nonprofit. Here is Sarah’s video about Prolific:

Prolific is an online service that allows researchers to quickly run studies using Prolific’s pool of potential research subjects. Prolific has over 150,000 research participants, and researchers can select participants using over 100 demographic screens. Researchers can also generate statistically representative samples of the entire U.S. or UK population. Participants earn a minimum of $6.50 per hour for each study they take part in, and sometimes more.

Sarah’s video drove thousands of new participants to Prolific. In the span of two weeks, Prolific’s U.S. base jumped from 40,000 to over 70,000 participants. Many young women followed Sarah’s advice to earn easy money responding to surveys as a side gig.

The challenge was that most of these new research participants were like Sarah: white college students in their early 20s. This skewed the demographics that researchers rely on for a truly representative sample of the U.S. population. Here is what Prolific’s U.S. representative sample looked like before the TikTok video was published:

Here’s what it looked like two weeks after the video was published:

As noted by Tony Tran of the Future Society:

The result? Thousands of studies being conducted using Prolific have been disrupted. This is due to the fact that researchers behind the surveys rely on the data being a representative sample of the population. With thousands of girls in their late teens to early twenties taking surveys, the data is now skewed.

One member of the Stanford Behavioral Lab posted on the Prolific forum dissecting the trend saying, “We have noticed a huge leap in the number of participants on the platform in the US Pool, from 40k to 80k. Which is great, however, now a lot of our studies have a gender skew where maybe 85% of participants are women. Plus the age has been averaging around 21.”

Future Society

Prolific is used by many academic researchers, such as Ph.D. candidates. Prolific had to refund fees for over 4,600 projects and make several product changes to cope with the wave of young women flocking to the platform to earn a quick buck as a side gig.
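A common way to partially correct a demographically skewed sample after the fact is post-stratification weighting: each respondent is weighted by their group’s share of the target population divided by that group’s share of the sample. The Python sketch below is a minimal illustration, not Prolific’s method. It assumes a single variable (gender), a hypothetical 50/50 population target, and a hypothetical 100-person sample matching the roughly 85% female skew reported in the Stanford Behavioral Lab post; `poststratification_weights` is a hypothetical helper.

```python
from collections import Counter


def poststratification_weights(sample_groups, population_shares):
    """Return a weight per group: population share / sample share.

    Underrepresented groups get weights above 1 and overrepresented
    groups get weights below 1, so the weighted sample matches the target.
    """
    counts = Counter(sample_groups)
    n = len(sample_groups)
    weights = {}
    for group, target_share in population_shares.items():
        sample_share = counts[group] / n
        if sample_share == 0:
            # A group with no respondents cannot be weighted back in.
            raise ValueError(f"no respondents in group {group!r}")
        weights[group] = target_share / sample_share
    return weights


# Hypothetical 100-person sample mirroring the ~85% female skew.
sample = ["female"] * 85 + ["male"] * 15
# Hypothetical population target, assumed 50/50 for illustration.
target = {"female": 0.5, "male": 0.5}

weights = poststratification_weights(sample, target)
print({g: round(w, 3) for g, w in weights.items()})  # {'female': 0.588, 'male': 3.333}
```

Each of the 15 men ends up counting as roughly 3.3 respondents. Weighting restores the headline proportions, but it leans heavily on a small number of people and inflates variance, which is one reason a skewed pool is a real problem rather than something researchers can simply adjust away.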

How Crowdsourcing Has Changed Market Research

Market research, whether for academic or commercial purposes, is hard. Obtaining statistically significant results has always been a challenge. Donald Trump’s election in 2016 is an example of how even the most sophisticated research organizations can miss the mark.

The Early Days

There are two major types of market research: qualitative and quantitative. Qualitative research examines the feelings, attitudes, opinions, and thoughts of individuals to uncover the underlying reasons for their behavior. In other words, it aims to determine what people think or feel about a situation and which factors influence their behavior. Techniques include in-depth interviews and focus groups. Quantitative research focuses on quantifying the collection and analysis of data. It takes a deductive approach that emphasizes testing theory and is shaped by empiricist philosophy. Examples include surveys, telephone interviews, and crowdsourced market research like that offered by Prolific.co.

The idea of crowdsourcing can be traced back centuries:

In 1567, King Philip II of Spain offered a reward to anyone who could devise a simple and practical method for precisely determining a ship’s longitude at sea. The reward was never claimed. Almost 150 years later, in 1714, the British government established the ‘Longitude rewards’ through an Act of Parliament, after scientists, including Giovanni Domenico Cassini, Christiaan Huygens, Edmond Halley, and Isaac Newton, had all tried and failed to come up with an answer. The top prize of £20,000, worth over £2.5 million today, provided a significant incentive to prospective entrants. Ultimately, the Board of Longitude awarded a number of prizes for the development of various instruments, atlases and star charts. However, it was John Harrison, a clockmaker from Lincolnshire, who received the largest amount of prize money overall – over £23,000. What is, perhaps, most astonishing about Harrison’s 300-year-old sea clock design is that a test of a working version in 2015 demonstrated that it is “the most accurate mechanical clock with a pendulum swinging in free air”, keeping to within a second of real-time over a 100-day test, according to Guinness World Records.

Deloitte, “Enterprise crowdsourcing and the growing fragmentation of work”

Nielsen ratings are one of the best-known examples of crowdsourced research. Starting in 1947, Nielsen released weekly ratings of popular radio shows, and it expanded to TV show ratings in 1950. From 1950, during the early days of television broadcasting, Nielsen used the Audimeter (audience meter), which attached to a television and recorded the channels viewed onto a 16mm film cartridge that was mailed weekly to company headquarters in Evanston, Illinois. The Audimeter was supplemented with weekly viewer diaries. In 1971, the Storage Instantaneous Audimeter allowed electronically recorded viewing history to be forwarded to Nielsen over a telephone line, making overnight ratings possible. The upgraded People Meter, introduced in 1987, records the viewing habits of individual household members and transmits the data to Nielsen nightly over a telephone line. This system lets market researchers study television viewing on a minute-by-minute basis, recording when viewers change channels or turn off their television.

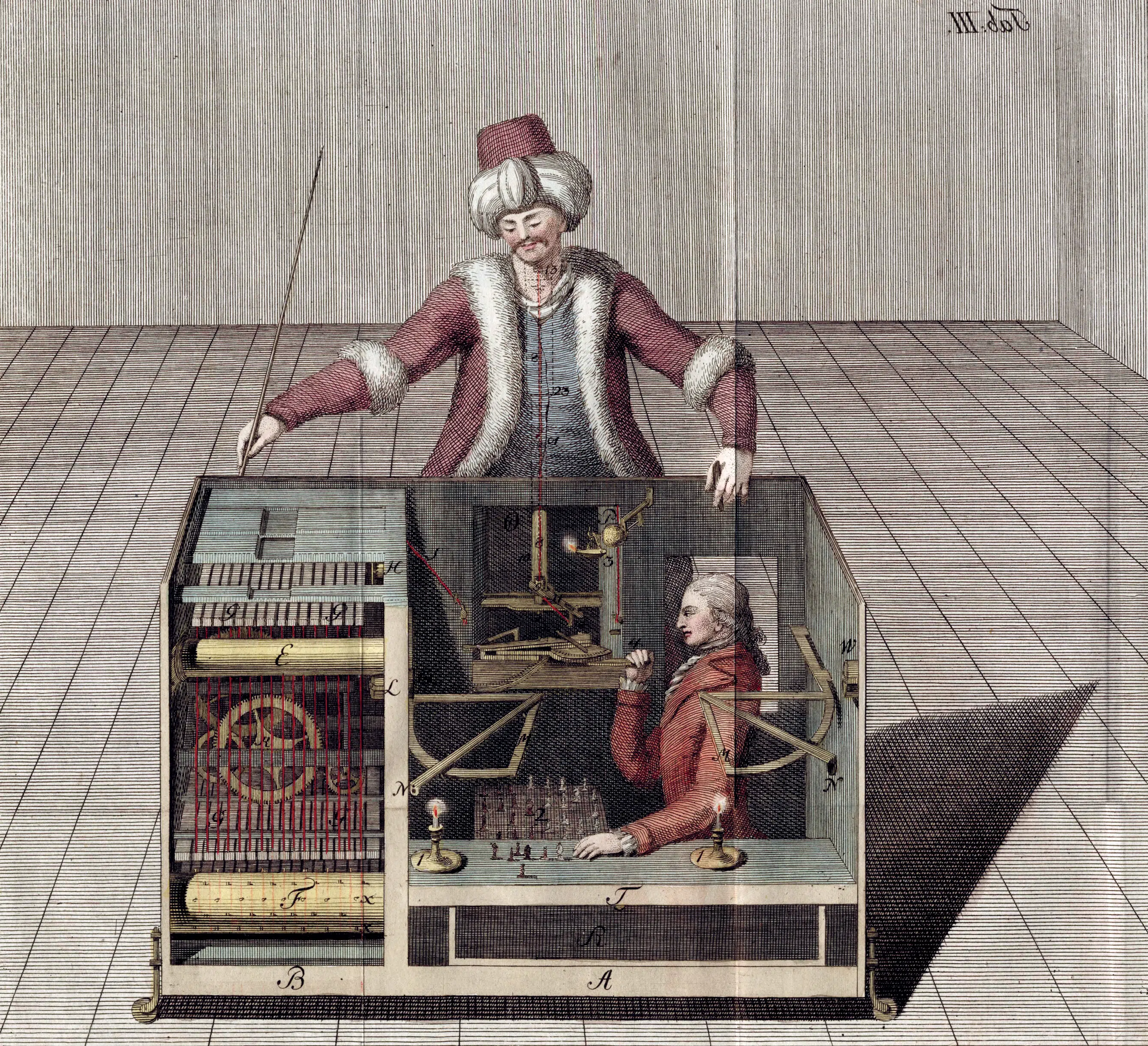

The Rise of Mechanical Turk

The Mechanical Turk was a fake chess-playing machine from the 1700s. It appeared to be able to play a strong game of chess against a human opponent. The Turk was a mechanical illusion that allowed a human chess master hiding inside to operate the machine. With a skilled operator, the Turk won most of the games played during its demonstrations around Europe and the Americas for nearly 84 years, playing and defeating many challengers.

In 2005, Amazon launched a crowdsourcing marketplace named after it, Amazon Mechanical Turk:

The Mechanical Turk website was the idea of Amazon chief executive Jeff Bezos, who believed a platform could be created to exploit the fact that humans can easily perform certain tasks that were difficult for computers. He predicted there was a business to be built around connecting those who wanted research done with those who were willing to do it. By creating the Mechanical Turk marketplace, Bezos tried to create a phenomenon he called “artificial artificial intelligence.”

“Normally, a human makes a request of a computer, and the computer does the computation of the task,” Bezos told The New York Times in 2007. “But artificial artificial intelligences like Mechanical Turk invert all that. The computer has a task that is easy for a human but extraordinarily hard for the computer. So instead of calling a computer service to perform the function, it calls a human.”

Pew Research

In the past 16 years, Mechanical Turk has become a popular tool for academic and corporate researchers as a way to get tasks completed efficiently and inexpensively. Researchers post tasks known as HITs (Human Intelligence Tasks). An analysis conducted by Pew Research in 2015 showed the breakdown of typical HITs:

Most HITs are microtasks that can be completed in a few minutes and pay anywhere from $0.01 to $1.00 each. Many people spend a lot of time doing Mechanical Turk work, but it is not very lucrative. According to Pew Research:

According to Leib Litman, Ph.D.:

“Hundreds of academic papers are published each year using data collected through Mechanical Turk. Researchers have gravitated to Mechanical Turk primarily because it provides high quality data quickly and affordably. However, Mechanical Turk has strengths and weaknesses as a platform for data collection. While Mechanical Turk has revolutionized data collection, it is by no means a perfect platform. Some of the major strengths and limitations of MTurk are summarized below.

Strengths

A source of quick and affordable data

Thousands of participants are looking for tasks on Mechanical Turk throughout the day, and can take your task with the click of a button. You can run a 10-minute survey with 100 participants for $1 each, and have all your data within the hour.

Data is reliable

Researchers have examined data quality on MTurk and have found that by and large, data are reliable, with participants performing on tasks in ways similar to more traditional samples. There is a useful reputation mechanism on MTurk, in which researchers can approve or reject the performance of workers on a given study. The reputation of each worker is based on the number of times their work was approved or rejected. Many researchers use a standard practice that relies on only using data from workers who have a 95% approval rating, thereby further ensuring high-quality data collection.

Participant pool is more representative compared to traditional subject pools

Traditional subject pools used in social science research are often samples that are convenient for researchers to obtain, such as undergraduates at a local university. Mechanical Turk has been shown to be more diverse, with participants who are closer to the U.S. population in terms of gender, age, race, education, and employment.

Limitations

Small population

There are about 100,000 Mechanical Turk workers who participate in academic studies each year. In any one month about 25,000 unique Mechanical Turk workers participate in online studies. These 25,000 workers participate in close to 600,000 monthly assignments. The more active workers complete hundreds of studies each month. The natural consequence of a small worker population is that participants are continuously recycled across research labs. This creates a problem of ‘non-naivete’. Most participants on Mechanical Turk have been exposed to common experimental manipulations and this can affect their performance.

Although the effects of this exposure have not been fully examined, recent research indicates that this may be impacting effect sizes of experimental manipulations, compromising data quality and the effectiveness of experimental manipulations.

Diversity

Although Mechanical Turk workers are significantly more diverse than the undergraduate subject pool, the Mechanical Turk population is significantly less diverse than the general US population. The population of MTurk workers is significantly less politically diverse, more highly educated, younger, and less religious compared to the US population. This can complicate the way that data can be interpreted to be reliable on a population level.

Limited selective recruitment

Mechanical Turk has basic mechanisms to selectively recruit workers who have already been profiled. To accomplish this goal Mechanical Turk conducts profiling HITs that are continuously available for workers. However, Mechanical Turk is structured in such a way that it is much more difficult to recruit people based on characteristics that have not been profiled. For this reason while rudimentary selective recruitment mechanisms exist there are significant limitations on the ability to recruit specific segments of workers.”

Strengths and Limitations of Mechanical Turk
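The 95% approval-rating practice Litman describes boils down to a simple filter over each worker’s approval history. The Python sketch below is hypothetical: the `Worker` record, its field names, and `eligible_workers` are invented for illustration and are not part of any MTurk API. On MTurk itself, requesters typically apply this kind of threshold as a qualification requirement when posting a HIT rather than filtering data afterward.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    # Hypothetical record; field names are illustrative, not MTurk's API.
    worker_id: str
    approved: int  # submissions the requester approved
    rejected: int  # submissions the requester rejected

    @property
    def approval_rate(self) -> float:
        """Share of reviewed submissions that were approved (0.0 if none)."""
        reviewed = self.approved + self.rejected
        return self.approved / reviewed if reviewed else 0.0


def eligible_workers(workers, min_rate=0.95):
    """Keep only workers whose approval rate meets the threshold."""
    return [w for w in workers if w.approval_rate >= min_rate]


pool = [
    Worker("A", approved=980, rejected=20),  # 98% approval
    Worker("B", approved=90, rejected=10),   # 90% approval
    Worker("C", approved=0, rejected=0),     # no history yet
]
print([w.worker_id for w in eligible_workers(pool)])  # ['A']
```

Treating workers with no reviewed submissions as 0% is a design choice: it is conservative, but it also shuts out newcomers, which reinforces the small, heavily recycled worker population Litman describes under Limitations.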

The Dark Side of Mechanical Turk

While Amazon’s Mechanical Turk has been a boon for market researchers, it has become a digital sweatshop for many workers. Many people use Mechanical Turk and other crowdsourced work sites such as Figure Eight, Clickworker, Toluna, and Prolific as their primary source of income.

In many economically depressed areas of the country, Mechanical Turk is one of the few options available. The Atlantic published a great article on this, “The Internet Is Enabling a New Kind of Poorly Paid Hell,” subtitled “For some Americans, sub-minimum-wage online tasks are the only work available.” It tells the story of one worker, Erica, in detail:

I talked to one such woman, a 29-year-old named Erica, who performs tasks for Mechanical Turk from her home in southern Ohio. (Erica asked to use only her first name because, she says, she read on Reddit that speaking negatively about Amazon has led to account suspensions. Amazon did not reply to a request for comment about this alleged practice.) Erica spends 30 hours a week filling out personality questionnaires, answering surveys, and performing simple tasks that ask her, for example, to press the “z” key when a blue triangle pops up on her screen. In the last month, she’s made an average of $4 to $5 an hour, by her calculations. Some days, she’ll make $7 over the course of three to four hours.

Erica, who has a GED and an associate’s degree in nursing administration, says the work for Mechanical Turk is the only option in the economically struggling town where she lives. The only other work she was able to find was a 10-hour-a-week minimum-wage job training workers at a factory how to use computers. “Here, it’s kind of a dead zone. There’s not much work,” she told me. In the county where Erica lives, only about half of people 16 years or older are employed, compared to 58 percent for the rest of the country. One-quarter of people there earn below the poverty line.

One reason Erica, who has filled out more than 6,000 surveys on Mechanical Turk and has a high rating on the site, earns so little is that the work simply doesn’t pay very well. But there are other reasons she makes so little that have to do with the nature of the platform. On Mechanical Turk, where she spends most of her working hours, Erica looks out for “HITs,” as assignments are called (for “human intelligence task”), that “requesters” are hiring for online. The tasks that pay the best and take the least time get snapped up quickly by workers, so Erica must monitor the site closely, waiting to grab them. She doesn’t get paid for that time looking, or for the time she spends, say, getting a glass of water or going to the bathroom. Sometimes, she has to “return” tasks—which means sending them back to the requester, usually because the directions are unclear—after she’s already spent precious time on them.

But despite the problems with Mechanical Turk, when Erica and her partner, who works for a food company, have a big bill coming up, she’ll spend extra time in front of the computer. Workers can choose to get paid every day, rather than waiting for a paycheck for two weeks. “I do it because on certain weeks, I can have a guaranteed $20 to send out to pay our bills—when we are completely flat-out broke until the following pay period,” she told me. Recently, Erica says, Amazon’s payments system has been on the fritz, though, and she hasn’t been able to get the money as quickly. The inability to get that $20 or $30 is a big problem in her household—currently, it means she doesn’t have the money to buy food to make for dinner. “I guess I’ll have to improvise,” she wrote to me, in an email.

The Atlantic
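Erica’s numbers, and the unpaid searching she describes, can be turned into a simple effective-hourly-rate calculation. The Python sketch below is illustrative: `effective_hourly_rate` is a hypothetical helper, and the 5 unpaid minutes in the second example is an assumed figure, not from the article. The other inputs come from Litman’s $1, 10-minute survey and Erica’s “$7 over the course of three to four hours.”

```python
def effective_hourly_rate(pay: float, task_minutes: float,
                          unpaid_minutes: float = 0.0) -> float:
    """Dollars per hour, counting unpaid time (searching for HITs, returned tasks)."""
    total_minutes = task_minutes + unpaid_minutes
    if total_minutes <= 0:
        raise ValueError("total time must be positive")
    return pay * 60 / total_minutes


# Litman's example: $1 for a 10-minute survey, counting task time only.
print(effective_hourly_rate(1.00, 10))                    # 6.0
# Same HIT, assuming a hypothetical 5 unpaid minutes spent finding it.
print(effective_hourly_rate(1.00, 10, unpaid_minutes=5))  # 4.0
# Erica: "$7 over the course of three to four hours."
print(round(effective_hourly_rate(7, 180), 2), effective_hourly_rate(7, 240))  # 2.33 1.75
```

Even the best case in Litman’s example falls below Prolific’s stated $6.50 hourly minimum, and every minute of unpaid searching pulls the effective rate down further.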

How Product Managers Can Use Crowdsourced Market Research

Market research is a core skill for product managers. Research is needed to properly frame market problems, understand customer personas, prioritize features, and conduct win-loss analysis. Crowdsourced market research is one of the tools product managers should have in their toolkits. There are several tools available for product managers:

- Amazon Mechanical Turk. Quickly develop and execute surveys for a wide variety of populations. Not necessarily tech-specific, but one of the fastest and least expensive options.

- Prolific. A Mechanical Turk competitor that positions itself as more ethical. Similar limitations to Mechanical Turk.

- UserTesting.com. Crowdsourced research services focused on customer experience (CX). Considered to be the market leader. Expensive: the lowest-priced tier is $20,000 annually.

- PlaybookUX. A UserTesting.com competitor. Similar functionality, with more extensive analytics. Significantly less expensive, with a $99/month entry-level plan.

- Win-Loss Agency. A specialist consultancy that offers qualitative interview-based research for enterprise software firms on topics such as sales win/loss, persona validation, and pricing studies. (Full disclosure: I am a board advisor to the Win-Loss Agency.)

- Gerson Lehrman Group (GLG). GLG is a high-end research organization with over 900,000 ‘council members’ who are typically former executives and industry experts. Customers can schedule one-on-one interviews with pre-screened experts on a wide variety of topics. GLG is expensive; many customers start with a $10K package. Council members can make over $100 for a one-hour teleconference. (Full disclosure: I have worked with GLG for over 10 years conducting briefings for investment bankers, VCs, private equity firms, and enterprise ISVs.)

- Guidepoint. A GLG competitor with a similar profile and cost structure.

Summary

The Internet has enabled a fundamental shift in market research. Crowdsourced services like Amazon Mechanical Turk, Prolific.co, and GLG have transformed how qualitative and quantitative research is done. Researchers can spin up a study in minutes, recruit hundreds of participants, and spend less than $150. While these services are powerful, they are also vulnerable to the Internet’s unpredictability, as the infamous Sarah Frank TikTok video showed.

Also published on Medium.