Why You Need To Do More Product Experiments (And How To Do Them Right)

Product experiments help product managers and their users get better results.

It’s that simple. You need to be running them.

In this blog, we’ll show you WHY and HOW.

Source: apptimize.com

Experimentation and A/B testing are bread and butter in marketing, but in product…it gets complicated.

While most marketers are familiar and well-equipped with code-free tools that allow them to run such experiments, product managers often think they would need to involve development resources each and every time they want to test something.

And in companies where dev resources are scarce, product experiments are often dropped altogether. That’s a bad mistake.

Product experiments CAN and WILL improve your customer activation rates, engagement levels, and ultimately user retention – so if you’re not doing them, you’re leaving money on the table.

In this blog, we’ll show how product managers can design and run product experiments – WITHOUT CODING – to enhance user experience in a data-driven way. Let’s get started!

Why Product Managers Need Product Experiments

Product roadmaps are intelligent guesses at best, and blind gambles at worst.

Source: researchgate.net

In order to offset the risks involved in shipping the wrong features and spending a lot of time and resources on something your users may not use, you can run product experiments to help you decide how to prioritize the different items on your roadmap.

But there are plenty of other reasons why you should run product experiments:

- Show you which designs, navigational controls, content, etc. lead to better conversion or more user success

- Help you to understand WHY users behave in the way they do, enabling you to design better features in future

- Disprove false ideas about your users, their needs, what they value, and how they think

- Resolve arguments between people who “feel” too strongly that their instincts are correct…

Product experiments give you real data about how users behave in your app.

Instead of making assumptions, they allow product managers to learn whether customer success rates would improve:

- If Calls To Action were in different locations or in different colors

- If navigation options were in a different order

- If a checklist showed them how far they had progressed through a task

- If contextual tooltips popped up when it looked like they were struggling to understand how to progress

- If a different user journey more suited to their precise needs would be more valuable

If you’re new to this blog, it may surprise you to learn that there are easy-to-use, easy-to-deploy, no-code tools that enable you to run in-app experiments of this sort.

If you’re a regular reader or a Userpilot user, it won’t surprise you at all – because that is one of Userpilot’s main features.

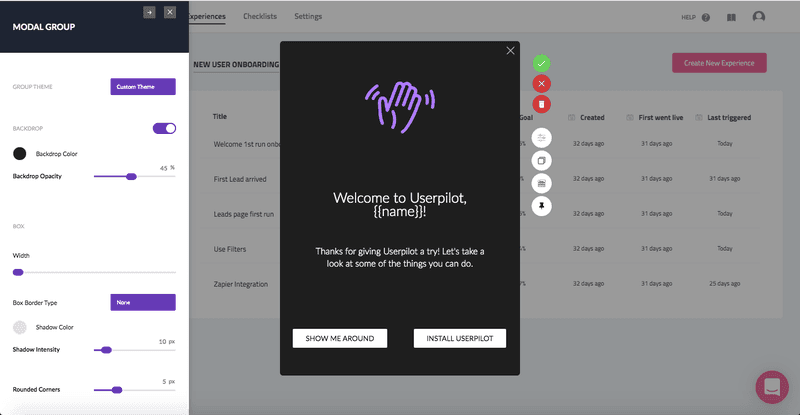

Source: userpilot.com

Goal Setting For Product Experiments

But wait.

If you want to extract actionable insights from user behavior, you have to put some thought into the tests you run.

We’ll dig a bit deeper into good experiment design in the final section of this blog, but at this point, I want to look at SETTING GOALS.

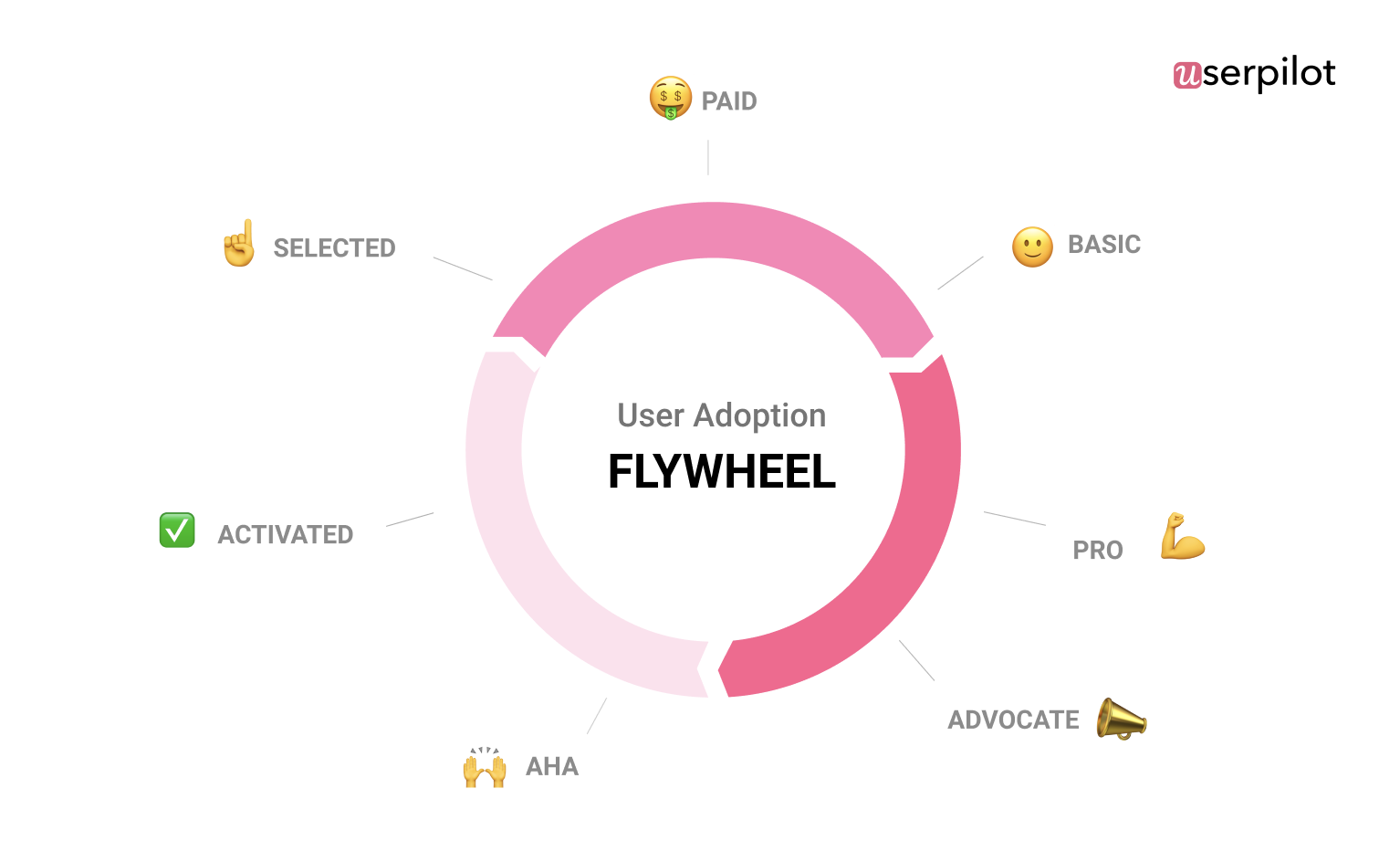

Source: userpilot.com

The best place to start is with your user journey. Here’s our adoption journey map, for example.


As a product manager, your aim is to push more users along this journey, faster.

We’ve talked about this a lot in other blogs, but moving a user from Aha! to Activated, for example, is a matter of getting them:

- From a position where they can SEE the value they’ll get from using your product

- To a position where they are EXPERIENCING that value for the first time

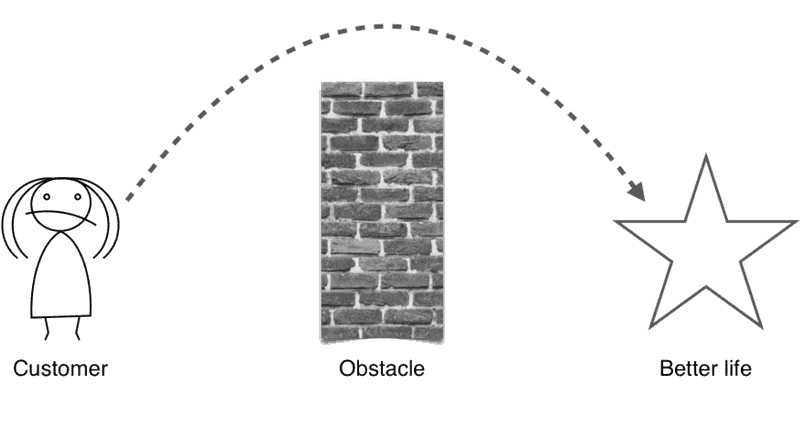

Source: justinjackson.ca

So a user at Aha! can see the Better Life on the other side of the Obstacle, but they have yet to climb over it…

For Userpilot, our Activation point is when a user installs our code snippet onto their website.

At that moment, they can start designing product experiences and contextual in-app help using our visual interface – AND see their work in practice.

So for us, the Obstacle users need to overcome is anything and everything that stops them from deploying that snippet.

For example, a lot of Userpilot’s end-users do not have the technical knowledge or access rights to install the code. Hence, they need to outsource it to their developers.

Or, we could provide comprehensive help pages explaining how to do it yourself – including how to integrate data extracted from other apps using Segment.

Source: userpilot.com

Which option would get better results?

Product experiments mean you don’t have to guess or assume.

You can give the help to one set of users and not to another and SEE which group Activates more.

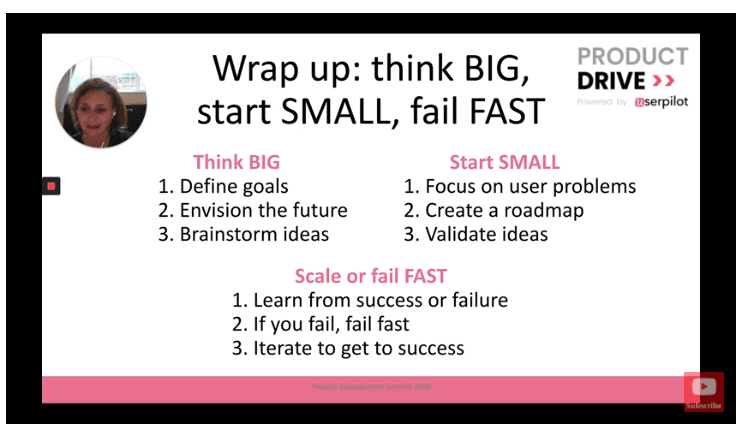

Source: Alexandra Levich, Google

As Alexandra Levich of Google explains in this video, the best way to design product experiments is to Think Big:

- Define your goals by identifying an obstacle to user journey progress

- Envision the future by sketching out what things would have to be like for that obstacle to no longer exist (or at least to be less of a blocker)

- Brainstorm ideas for hypotheses to test. That is, changes to the product experience you could make that you think may improve user success

To sum it up: your product experiments should be aimed at reducing users’ pain points.

Product Experiments That Will Boost Your Key Metrics Without Coding

In this section, we’ll look at four different types of experiments you can use to discover what moves the needle on different problems.

Boosting User Activation

Onboarding checklists are widely believed to make a big difference to Activation rates.

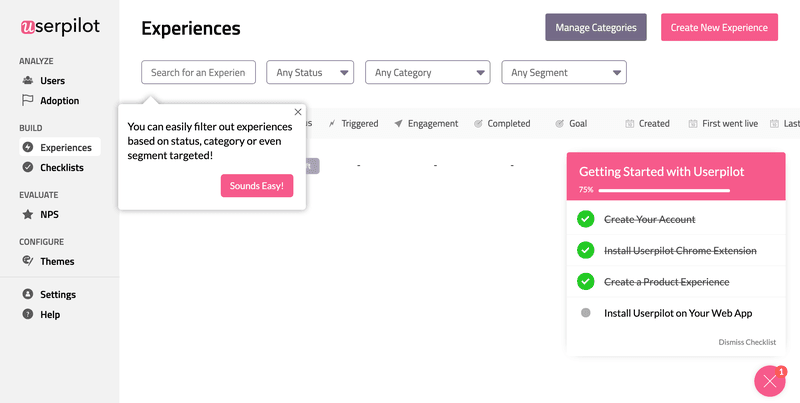

Source: userpilot.com

By showing a user all of the steps they need to go through to hit a key-value milestone – and how many are left to complete – checklists help users along their journey to Activation.

But what is the best way to use a checklist?

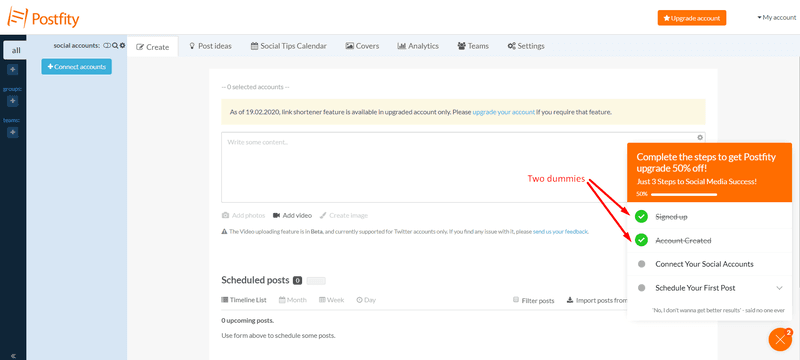

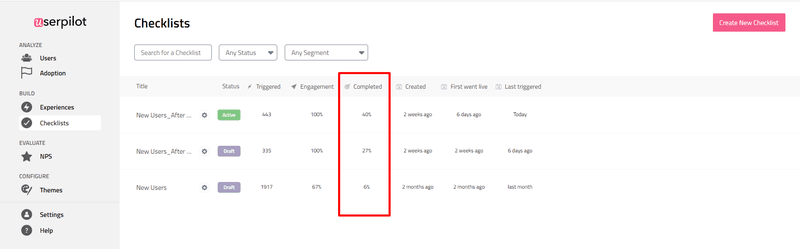

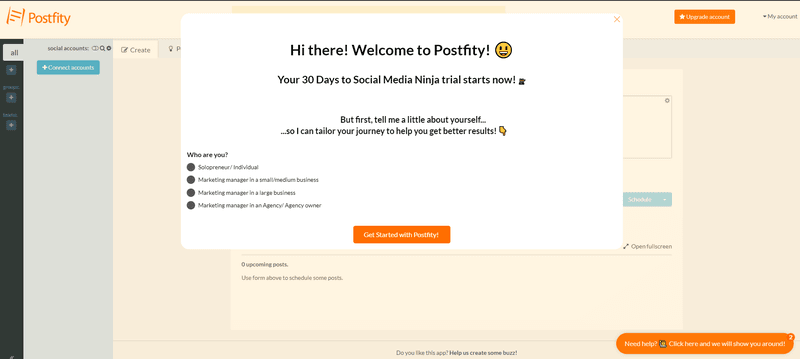

Social media scheduling tool Postfity used Userpilot to find out.

At the start, new Postfity users were presented with a checklist with none of the items marked as completed. This was giving them a 67% engagement rate but only a 6% completion rate.

Our Customer Success Team suggested trying this with some items on the list pre-ticked, so as to leverage the psychological bias most people show toward completing tasks that they have already started.

So Postfity added two alternate versions: one with one dummy item already ticked on the checklist, and one with two items already ticked.

Source: postfity.com

New users were shown one of the three checklists at random.

The results were striking.

Source: userpilot.com

The checklist with two items pre-ticked outperformed the other options in live tests – even though all the items were the same.

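One possible explanation is the Zeigarnik effect: people tend to remember unfinished tasks better than completed ones, which may nudge them to finish a list that already looks started.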
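If you are curious what "shown one of the three checklists at random" can look like under the hood, here is a minimal sketch in Python. It is not how Userpilot or Postfity implement it; the variant names, the experiment name, and the `assign_variant` helper are all made up for illustration. The idea is to hash the user ID so that each user lands in a roughly random bucket but always sees the same variant on every visit.

```python
import hashlib

# Hypothetical variant names for a three-way checklist test.
VARIANTS = ["no_items_ticked", "one_item_ticked", "two_items_ticked"]


def assign_variant(user_id: str, experiment: str = "onboarding_checklist") -> str:
    """Map a user to one variant, deterministically and roughly evenly."""
    # Hashing the experiment name together with the user ID means the same
    # user always gets the same variant, and a different experiment splits
    # the same users in a different way.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(VARIANTS)
    return VARIANTS[bucket]


# Example: count how 3,000 made-up user IDs are spread across the variants.
counts = {variant: 0 for variant in VARIANTS}
for i in range(3000):
    counts[assign_variant(f"user-{i}")] += 1
print(counts)
```

Sticky assignment matters because a user who sees a different checklist on every login would muddy the results for all three groups.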

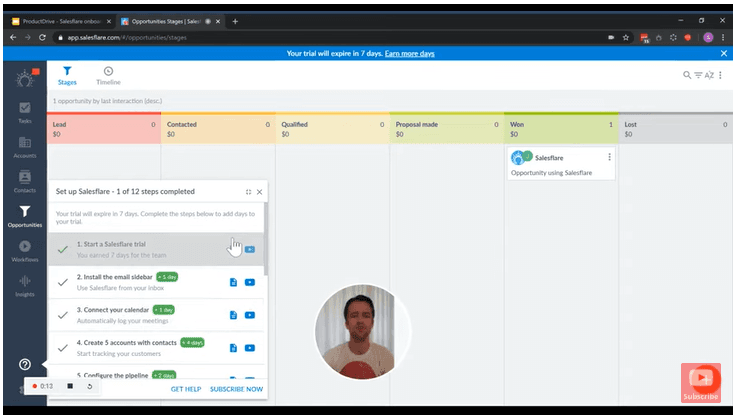

Salesflare has experimented with gamification – providing incentives for completing onboarding tasks.

Source: Jeroen Corthout, Salesflare

As co-founder Jeroen Corthout explains in this video, his product needs new users to go through 12 steps before they start to see value (i.e. before Activation).

As a CRM tool, Salesflare delivers more value the more you use it, the more your colleagues use it, and the more of your own data you import.

So, they ran an experiment to see whether adding extra days to users’ free trials in exchange for completing tasks improved Activation rates.

At the time of recording the video, Jeroen had collected the following insights:

Source: Jeroen Corthout, Salesflare

That is super-valuable intelligence that Salesflare can use to keep improving the user experience with new product experiments.

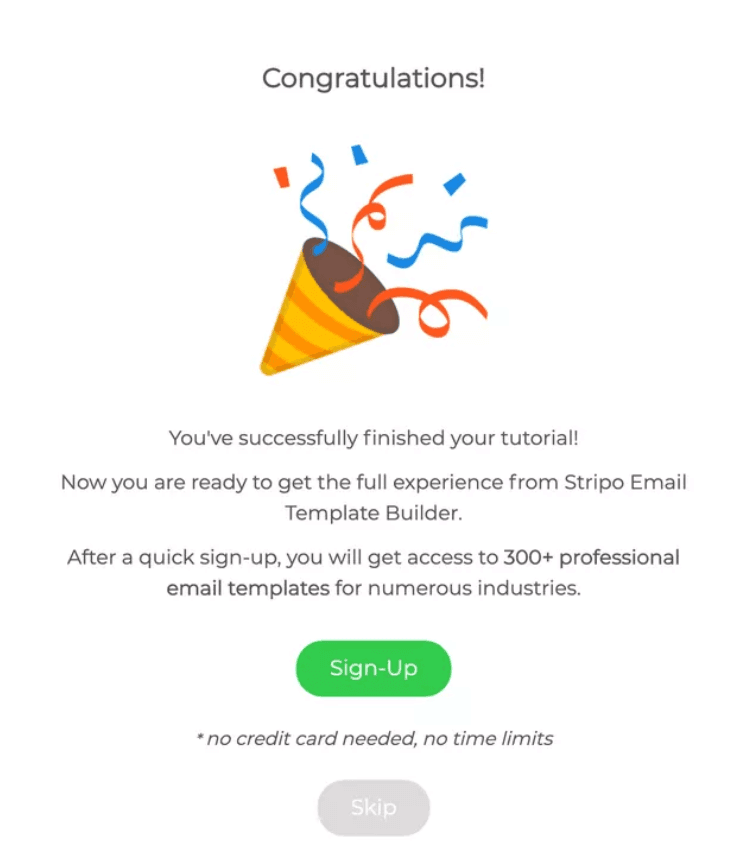

Gamification can be even simpler than this though.

When users reach Stripo’s Activation point, they see a little celebration image, like this:

Source: stripo.com

Another onboarding experiment would be to test the impact of a welcome screen.

Show your new users one of the following alternatives at random:

- No welcome screen at all – confront them with a dashboard and leave them to get on with it

- A welcome screen that provides some useful “getting started” information or invites them to take an interactive tour

Source: userpilot.com

- A welcome screen that asks the user for information about themselves, so that you can tailor their experience to their specific needs through segmentation

Our research found that only 60% of SaaS products use welcome screens. It may be the case that some tools don’t need one – their users prefer to just get on with it.

You can only tell what’s best for your users by running product experiments.

Boosting Feature Adoption

Perhaps you already know that users see more value, use your product more regularly and stay as paying customers for longer when they use more than one of the product’s features.

Promoting additional feature adoption is a challenge for many SaaS companies because they throw all their efforts into Activation – getting to the first value.

But if your onboarding ends there, you are missing out on the opportunity to move users further along the journey and to deepen your relationship with them.

The question is HOW best to promote those additional features.

Well, this is another area where product experiments can provide actionable insights.

In Userpilot, we let you incorporate Driven Actions into your experiences.

Source: userpilot.com

These are contextual “hints” that allow you to push users towards certain desired actions. For example:

- (Native) Tooltips – Information about certain options on-screen can be conveyed with pop-up or slide-in boxes. These can be present on load or perhaps triggered when a user shows intent by their mouse movements (e.g. hovering over a spot)

Source: userpilot.com

- Hotspots – These are a little bit more subtle. The button you want the user to click changes color or pulsates in some way that catches the user’s eye and makes them pay attention

Source: userpilot.com

Alternatively, you could promote feature engagement in one of these other ways:

- A secondary onboarding checklist. Once users have completed the basics, introduce them to a new set of tasks to complete.

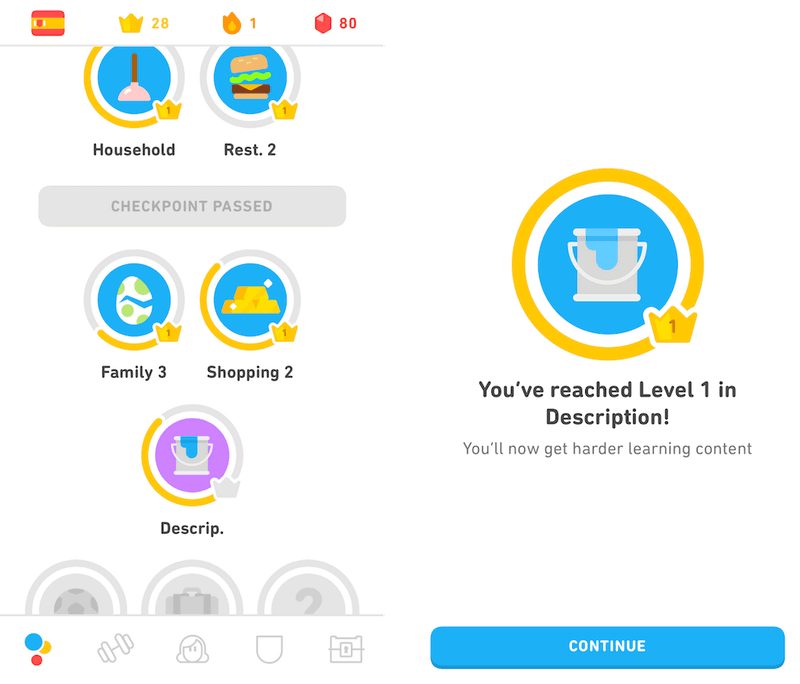

- Gamify further learning by including rewards and presenting it as a matter of “leveling up”. Duolingo uses this brilliantly to motivate users and promote further learning

Source: duolingo.com

- In-app marketing – Run webinars and other tailored help sessions to enable users to get more out of the product

Once again, there are any number of ideas that might encourage your users to adopt new features.

But you can only find out which are best when you experiment.

Improving User Retention

What if there was a way you could turn around churning users and reinstate them as happy customers?

Well, maybe there is…

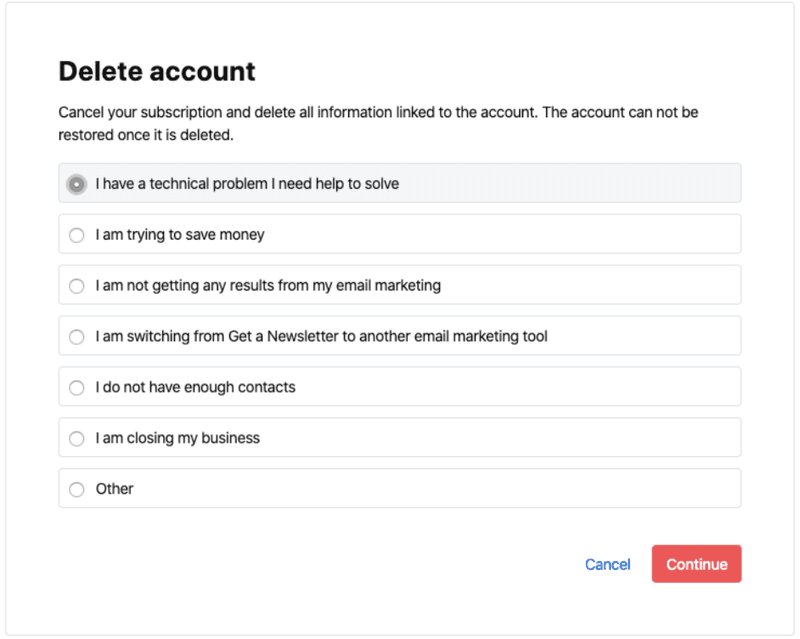

Many SaaS companies ask users to complete a survey when they cancel their subscriptions. Here’s one from Get A Newsletter:

Source: getanewsletter.com

There’s not much you can do if your customer is closing their business, but for almost every other option there may well be something you could do to improve matters.

You have two options here:

- Follow the feedback up and try to reverse the churn by offering to fix the problem

- Don’t follow it up with the outgoing user – but take their comments on board to improve the experience for all future users

Get A Newsletter opted to follow up on the feedback, like this:

Source: getanewsletter.com

Which works best?

There’s only one way to find out…

Show one option to one set of users and the other option to a second set.

Then analyze the relative performance of each group against one core metric – in this case, churn.
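To make "analyze the relative performance of each group" a little more concrete, here is a rough sketch in Python. The group names and counts are invented purely for illustration, and `wilson_interval` is our own helper, not part of any tool mentioned here. It reports each group's churn rate with a Wilson score confidence interval, so you can see how much uncertainty sits around each number before declaring a winner.

```python
from math import sqrt
from statistics import NormalDist
from typing import Tuple


def wilson_interval(events: int, total: int, confidence: float = 0.95) -> Tuple[float, float]:
    """Wilson score interval for a proportion such as a churn rate."""
    if total <= 0:
        raise ValueError("total must be positive")
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    p = events / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half_width = z * sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return centre - half_width, centre + half_width


# Made-up counts: (users who churned, users in the group).
groups = {
    "followed_up": (30, 400),
    "not_followed_up": (48, 400),
}

for name, (churned, total) in groups.items():
    low, high = wilson_interval(churned, total)
    print(f"{name}: churn {churned / total:.1%} (95% CI {low:.1%} to {high:.1%})")
```

If the two intervals overlap heavily, that is a hint you may need more users or a longer test before drawing conclusions.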

Increasing Revenue

It’s also simple to experiment with upselling and cross-selling to your existing users.

One way is to impose usage limits on certain features.

Postfity, for example, allows users to schedule up to 10 posts on its free plan.

After that, users are directed to upgrade their subscriptions.

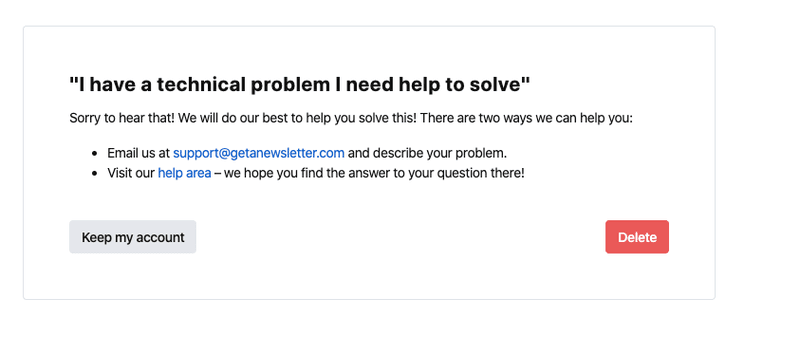

Many SaaS products display all of their available features on new users’ dashboards, but then restrict access to some of them to premium users.

Source: dropgalaxy.com

Alternatively, the upsell call to action can be triggered contextually. Here is one way that Hubspot does it:

Source: hubspot.com

Would a modal, a slide-out or a banner get better results?

Or perhaps you could show some users case studies or testimonials from happy customers, explaining how they achieved value with your service?

Again, the key is not to just try things out without a plan.

You need a rigorous, experimental approach to uncover insights you can depend on.

Source: userpilot.com

And that’s what we’re going to look at in our final section.

A Note On Running Product Experiments Correctly

It’s very easy to run BAD experiments, where the data you collect is worthless.

You probably know the basics of the scientific method, so I won’t go over basic concepts like Controls and Variants, statistical confidence, randomized samples, etc here.

Instead, here are some lessons I’ve learned from running product experiments myself and helping other people to do so with Userpilot:

- Design your experiments to test hypotheses. Don’t fit hypotheses into experiments you’ve already set up!

- Set a time limit and stick to it. Don’t end an experiment early because the results seem to be leaning one way. They could change!

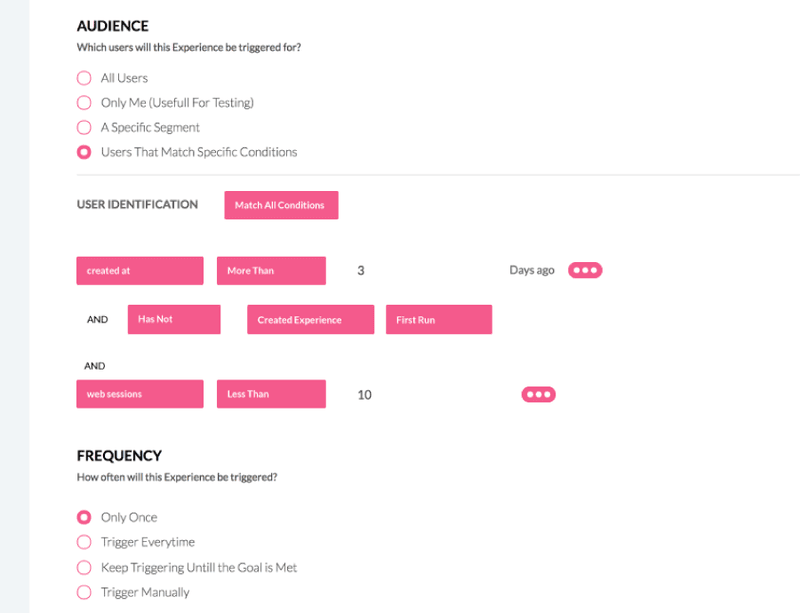

- Segment your audience properly. Don’t try out variants on regular users who are already familiar with your app. Restrict experiments to new users who have no preconceptions

- Even if you get a positive result, run the same test again to see if you get the same results. The more consistent the results, the greater confidence you can have that the change you’ve made is causing the change in results

- Supplement your data with qualitative feedback, such as interviews, field studies, etc. This will help you understand WHY the changes you made led to better results

- Don’t just carry out single tests. Your experiment program should include multiple tests per KPI.

- Avoid “local maxima” problems. If your Variant and Control are very similar, the gains you achieve by switching may not be as great as if you had tested the Control against something else.

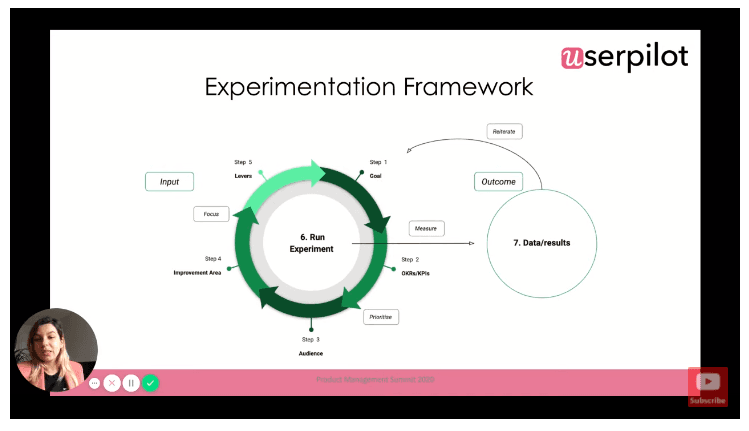

But as Alexandra Ciobotaru of Novorésumé explains in this video, the BEST results come from an integrated cycle of testing, improvement, and retesting.

Here’s a screenshot showing her Experimentation Framework, which she outlines in the video (15:08).

Source: Alexandra Ciobotaru, Novorésumé
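The advice above to set a time limit and stick to it is easier to follow if you estimate, before you start, how many users each group needs. Here is a minimal sketch in Python using the standard normal-approximation formula for comparing two proportions. The function names, the rates, and the signup volume are all illustrative assumptions, so plug in your own numbers.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline_rate: float, expected_rate: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per group to detect baseline -> expected."""
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to size an experiment")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = baseline_rate - expected_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def days_to_run(users_per_group: int, groups: int, new_users_per_day: int) -> int:
    """Days needed to fill every group at a given signup volume."""
    if new_users_per_day <= 0:
        raise ValueError("new_users_per_day must be positive")
    return ceil(users_per_group * groups / new_users_per_day)


# Illustrative numbers only: hoping to lift a 6% rate to 9%, 100 signups a day.
needed = sample_size_per_group(0.06, 0.09)
print(needed, "users per group,", days_to_run(needed, 2, 100), "days")
```

Fixing the duration up front this way removes the temptation to stop as soon as one variant pulls ahead.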

How to set up A/B tests in-app with Userpilot?

Setting up A/B tests inside your product with Userpilot takes minutes and requires no coding:

1) First, go to the ‘Features’ tab in Userpilot and tag the feature you want your users to use more:

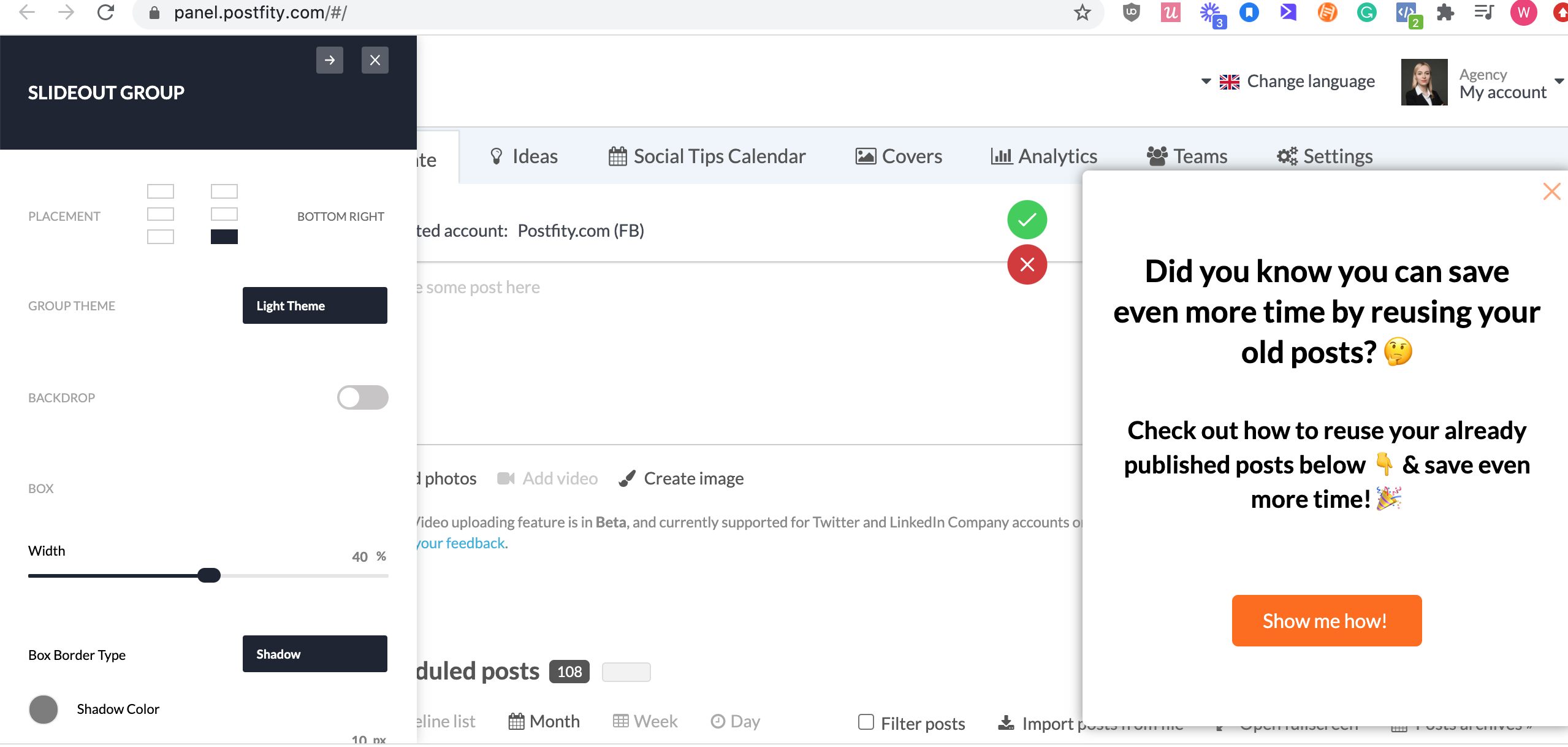

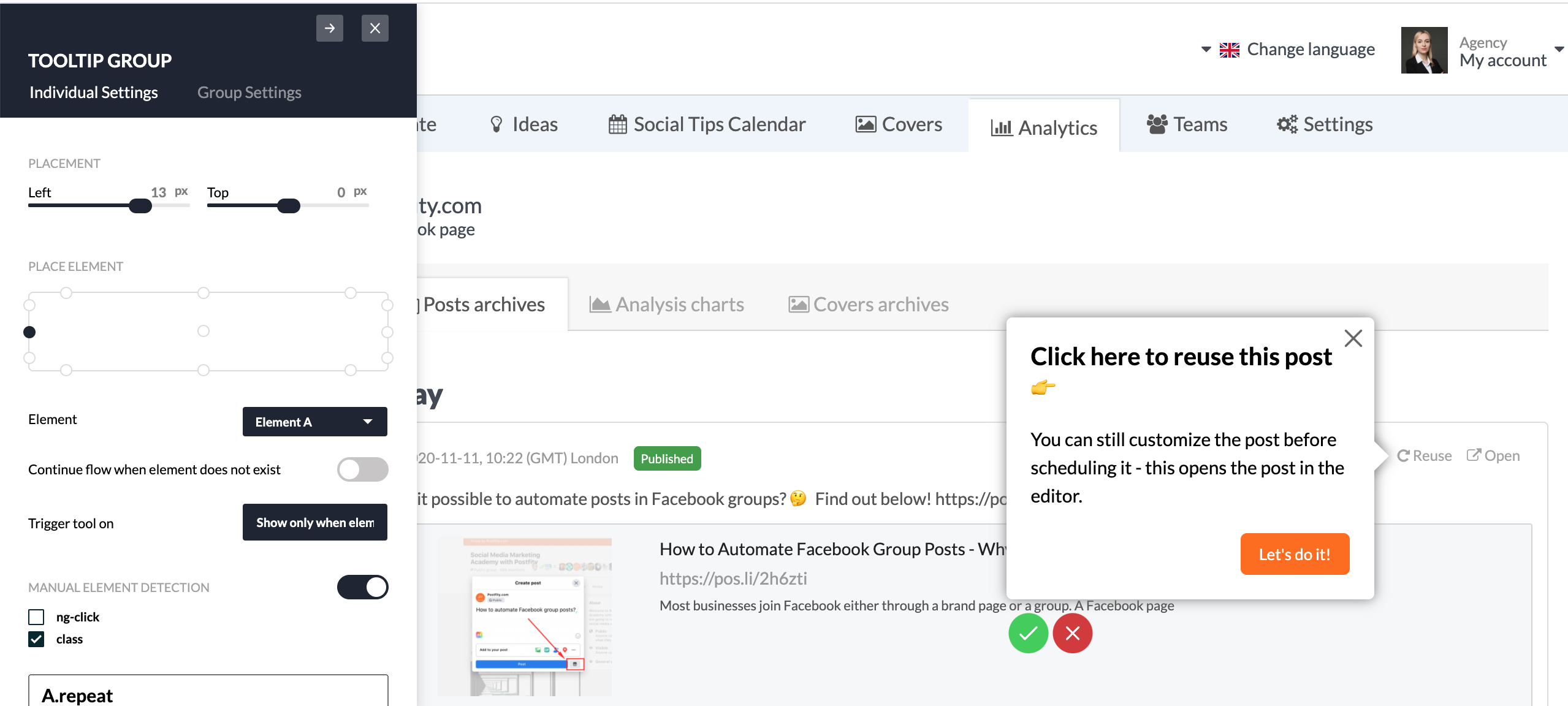

2) After tagging the feature, create an experience that will nudge the users to use it more:

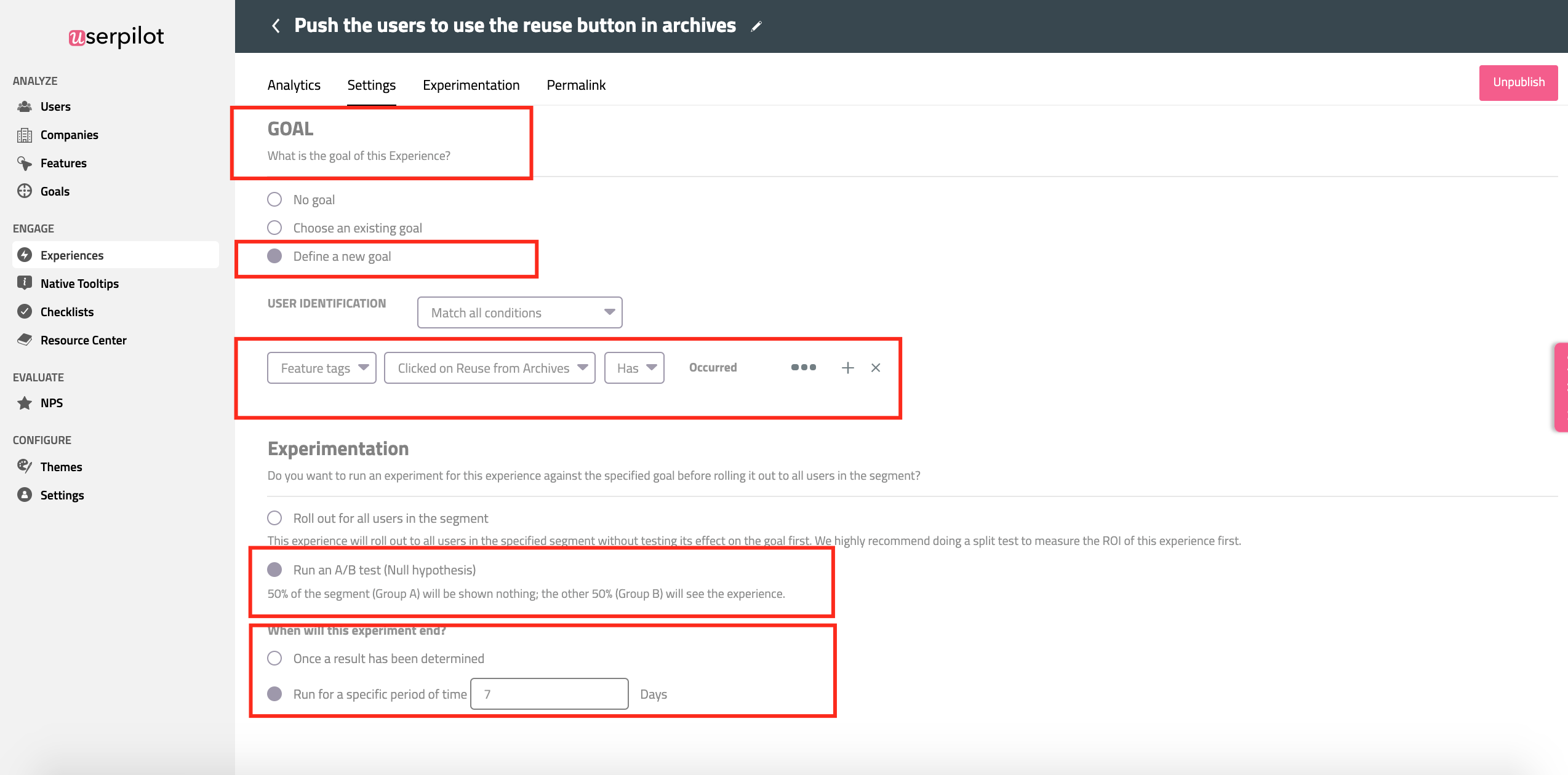

3) After finalizing the experience flow, go to settings, and define a goal for this experience:

Select ‘Feature Tags’ and choose the feature you tagged in step 1.

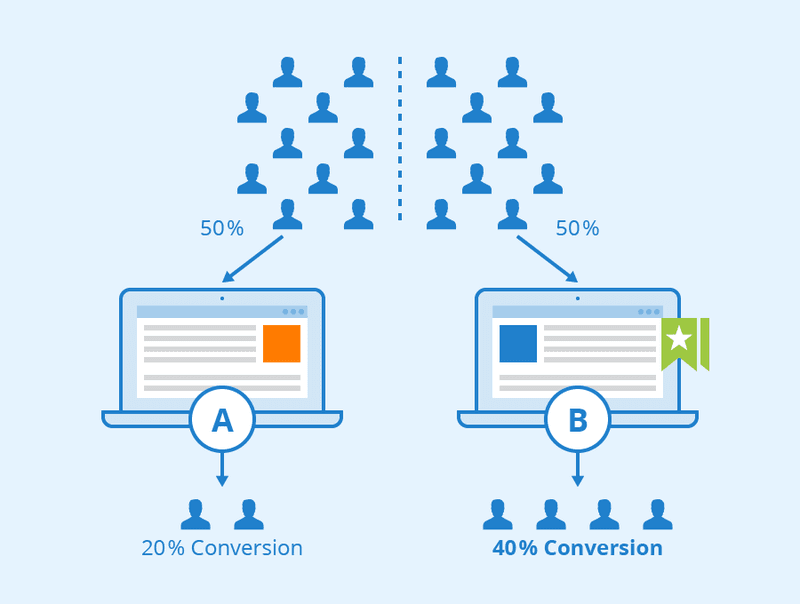

4) Then, select ‘Run A/B test (null hypothesis)’. This will show the experience to 50% of the audience you’ve chosen in your experience settings, and show nothing to the other 50% (assigned at random, of course).

You can either run it as long as it takes to achieve a statistically significant result or for a specific period of time.

And that’s it!

A/B testing enables you to compare the results of both groups. Because users are assigned at random, a statistically significant difference between the groups can reasonably be attributed to the experience you showed.

This is a very simple way to see the ROI of the experiences you’re creating!
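If you export the numbers and want to sanity-check what "statistically significant" means for a 50/50 test like this, a two-proportion z-test is one common choice. The sketch below is our own illustration in Python, not what Userpilot computes internally, and the counts are invented.

```python
from math import sqrt
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z statistic, two-sided p-value) for the difference in two rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pool both groups to estimate the rate under the null hypothesis
    # that the experience makes no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test when no one or everyone converted")
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up counts: control saw nothing, variant saw the experience.
z, p = two_proportion_z_test(conv_a=50, n_a=1000, conv_b=100, n_b=1000)
print(f"z = {z:.2f}, p = {p:.5f}")
```

A small p-value (commonly below 0.05) suggests the difference is unlikely to be down to chance alone, provided you fixed the test duration in advance.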

Building a culture of product experiments in your company will save you money and time because it pushes every decision to be data-driven.

If you can achieve that, you’re well on your way to great success.