Current generative AI tools can come up with videos and images within minutes. We still have to ask: are creative experts like designers and photographers ready to let go of experimenting in their creative work? Are they prepared to generate unpredictable visual assets based on prompts? Will image generation AI help them?

To make image and video generation tools more reliable and to increase creatives’ trust in them, we should take a critical look at the current user experience with these tools. In the following blog post, we’ll explore some of the issues and provide solutions for creating a better image-generation experience.

Generative AI’s visual generation capabilities are improving very fast. Long gone are the days when we could ridicule AI for the way it rendered human hands. The best examples of this are the official demos of Sora, the new AI video generation model from OpenAI. With its natural output, even AI hallucinations feel real somehow.

While the text-to-video generator will be publicly available only later this year, Hollywood executives have already started a discourse around Sora’s place in a high-end video production workflow. Does this mean a different type of generative AI experience can fully cater to the needs of specialists like cinematographers and visual artists?

If output quality is the only buy-in factor for tools like Sora, crucial angles of the user experience and industry requirements will be overlooked, lowering the chance of adoption. And from a creative experience point of view, there’s still plenty of room for improvement in current image-generation tools.

Where image generation tools fail creatives

Current AI image-generation tools go against the creative process in a few aspects, especially in visual fields.

Loss of experimentation in the creative process

We all know how it works: you give a prompt to a generative AI tool, hit enter, and then get the visuals. It’s a pretty rough simplification, but most AI image generation tools work like this, whether it’s video or static images. Yet, traditionally, the creative process consists of an entirely different approach. Creativity is a complex set of progressive decisions and connected little experiments, especially in the visual field.

You can describe a finished painting in detail before you start, but the description will rarely match the result exactly. As the artist, you come up with new ideas, experiment, and commit to them while you paint, without repainting the canvas over and over again.

Painting something from the ground up is both demanding and rewarding. However, I don’t want to neglect the biggest selling factor of AI image generation tools: ease of use. This allows people to create (somewhat) new art without the skills, dedication, or time. If concerns and problems around generative AI are addressed and managed, AI image generation could become a powerful alternative to stock photography. This could benefit people without the right creative skills very soon.

Words versus visuals

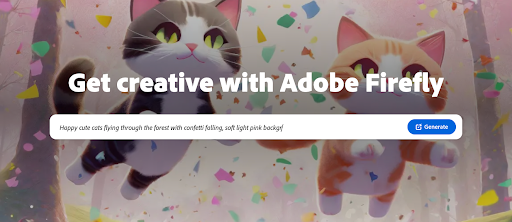

Prompts are great if you can describe what you imagine in detail, and if the AI model is in line with your creative needs. Yet basing the entire image generation process only on text feels disconnected and unrealistic to someone deeply involved with creativity, especially when it comes to visuals. As a designer, it feels weird that how well I can articulate ideas and my command of language are the key factors in building a very visual output.

I’m far from saying bulk prompts are bad. I think they are a great way to produce a baseline. Still, prompting is currently the only way to express your creative vision. Making visual decisions through progressively more descriptive text inputs can pull you away from the image itself, and you may frequently find yourself settling for less-than-ideal results.

No control over what to keep visually

Hitting the generate button every time you need to make minor adjustments to your generated image is wasteful in every aspect. It accumulates server-side processing waste, introduces a lot of waiting time, and also (re)generates the entire image from the ground up.

This issue makes creatives feel even less in control. Regenerating visuals can mean compromising a composition and concept you liked just to make a minor adjustment. I often had to trash a lot of unique AI art because of hallucinations or small inconsistencies that the current tool workflow couldn’t correct.

The consecutive loops of incrementally improved prompts and image outputs can provide elements for professional use. However, production-ready art creation typically relies on traditional design tools like Photoshop.

AI’s superpowers should go beyond image generation

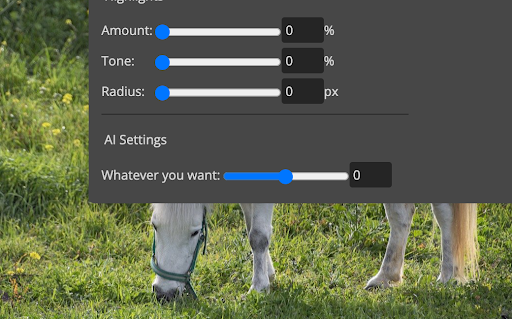

The cake’s baked when AI finishes generation: what you see is what you get. Without an extensive set of AI options to amplify professional needs when it comes to visual generation, your creative “collaboration” with AI stops as soon as you receive your generated materials.

In current image generation tools, editing a rendered AI image typically means very traditional adjustments to the entire scene, for example, cropping and basic color corrections. It’s basically the same experience as hitting the edit button on a photo on your phone, without any AI in this post-processing phase to help you.

An interesting fix from a user perspective would be to open up your favorite editing tool, lasso around specific objects, and invent new parameters for them. Highlight a horse in the composition, type “fur length,” and receive a traditional UI slider that influences only the fur of the highlighted horse. For image generation AI models, this would introduce much-needed granularity in image iterations while reducing wasted effort.

With few exceptions, popular generative tools focus all the generative AI power into the entire scene. Still, I can imagine a more granular way to edit using AI, with small blocks of generative computing focusing on objects rather than scenes.

UX is more important than ever for AI products

As generative AI tools become more powerful, especially when it comes to image generation, they will gradually attract power users. Industry professionals will clearly open up more to AI tools and platforms in the upcoming months and years.

To maximize the potential of generative AI, AI product designers and managers need to build an experience that helps visual professionals model their creative process. Designing a good user experience around the creative process will be critical. It will help these tools move from random experiments into reliable, trustworthy platforms.

Here are some ideas on how to make the shift.

Making generative AI more appealing to professionals

The lack of creative control over improvements and the unpredictability of AI-generated visuals can be a barrier to the trust experts build towards their tools. It can seem like AI works instead of you rather than working with you, and this dynamic is a big part of why users feel they are not in charge.

Through conceptual UX explorations, we identified some ways in which existing visual generation AI tools could be improved to appeal to a much wider group of industry professionals.

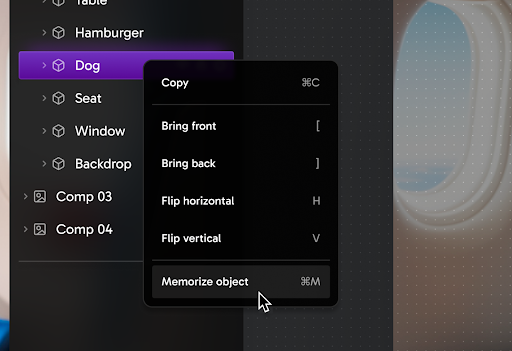

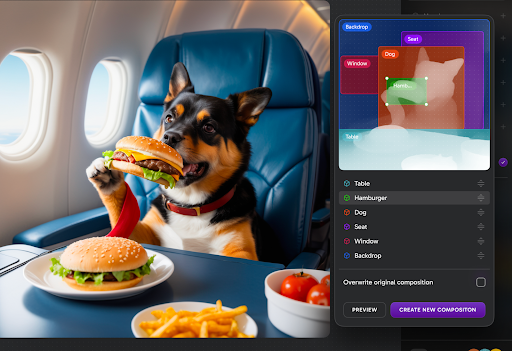

1. Bring back layers

Currently, your AI-generated composition is a flattened bitmap. If there is no separation between the objects, designers need to revert to traditional tools like Photoshop to reach production-level quality.

Layered comps also give users a quick and easy option for granular prompting, bringing non-disruptive, object-based tweaks into the workflow. A layer system is a simple, familiar, and powerful mechanism to support object-based AI editing instead of scene-level modifications. It also partly brings back control to creatives.
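To make the idea more concrete, here is a minimal sketch of how a layered composition could be modeled. The `Layer` and `Composition` names and the `regenerate` method are hypothetical and do not come from any existing tool; the point is only that changing one object leaves the others untouched.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    """One object in the composition (hypothetical model, not a real tool's API)."""
    name: str
    prompt: str
    version: int = 0  # bumped every time this layer is regenerated


@dataclass
class Composition:
    layers: list[Layer] = field(default_factory=list)

    def regenerate(self, name: str, new_prompt: str) -> Layer:
        """Update a single layer's prompt; every other layer stays as it was."""
        for layer in self.layers:
            if layer.name == name:
                layer.prompt = new_prompt
                layer.version += 1
                return layer
        raise KeyError(f"No layer named {name!r}")


comp = Composition([
    Layer("sky", "overcast sky"),
    Layer("horse", "brown horse"),
])

# Tweak only the horse; the sky layer is not regenerated.
comp.regenerate("horse", "brown horse with a longer mane")
```

In a real product, regenerating a layer would call an image model for that object only. Here the version counter simply stands in for that step.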

2. Set boundaries for prompts, literally and visually

Objects clip, eyes look weird, and text is nonsense. My frustration often turns into hate when an otherwise nice AI-generated composition fails on a small or sometimes even microscopic level. I know that revisiting the original prompt won’t help me. As mentioned earlier, you’ll ditch or overwrite your preferred composition by hyperfocusing the original text on the elements at fault.

Similar to Adobe’s Generative Fill, an AI lasso tool would be a logical merge of quick selection and powerful AI. It addresses the creative need for local improvements: highlight the points you dislike and instruct the AI to solve them. Again, this would give back more detailed supervision to creatives.
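The core of a local edit can be sketched in a few lines. This is a simplified illustration of mask-based compositing in general, not a description of how Adobe’s Generative Fill works internally. The function name `apply_masked_edit` is hypothetical, and the tiny grids of numbers stand in for real pixel data.

```python
def apply_masked_edit(original, regenerated, mask):
    """Take pixels from `regenerated` only where the lasso mask is True.

    All three arguments are 2D lists of the same shape.
    Everything outside the selection keeps its original value.
    """
    return [
        [new if selected else old
         for old, new, selected in zip(orig_row, new_row, mask_row)]
        for orig_row, new_row, mask_row in zip(original, regenerated, mask)
    ]


original = [[1, 1, 1],
            [1, 1, 1]]
regenerated = [[9, 9, 9],
               [9, 9, 9]]
# True marks the lassoed area the user wants the AI to fix.
mask = [[False, True, False],
        [False, True, True]]

result = apply_masked_edit(original, regenerated, mask)
# result == [[1, 9, 1], [1, 9, 9]]
```

The composition you liked survives outside the selection, which is exactly the control that full regeneration takes away.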

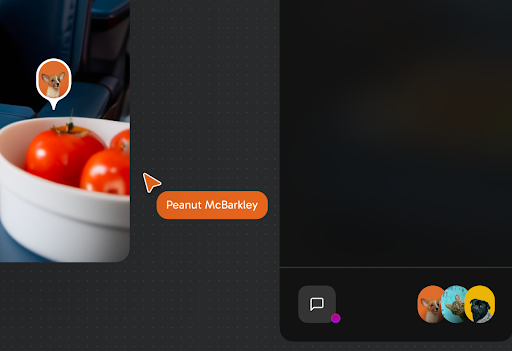

3. Reusable objects

A movie in which our main actor looks different in every shot is uncanny and confusing. Currently, AI is not bulletproof when it comes to consistent results.

In traditional design workflows, component, token, and class-based design allow us to be consistent, even when we create brand-new designs and layouts. We need generative AI to remember and reliably reuse elements, objects, faces, textures, and settings. This should occur in whatever context we desire.

An AI object repository can help designers create a system of visual elements. Creatives could declare a set of artifacts and variables or push changes as they would in a traditional design system. This allows professionals to produce assets for long-term, focused campaigns rather than creating one-offs all over again. It also ensures a consistent style that creatives can rely on.
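As a rough sketch, such a repository could behave like a small design-token store. Everything here is hypothetical: the `VisualObject` and `ObjectRepository` names, the `{hero}` placeholder syntax, and the `seed` field, which is only a stand-in for whatever a model would need to reproduce an object reliably.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class VisualObject:
    """A declared, reusable element (hypothetical structure)."""
    name: str
    description: str
    seed: int  # placeholder for model-specific identity data
    version: int = 1


class ObjectRepository:
    def __init__(self):
        self._objects = {}

    def declare(self, obj: VisualObject) -> None:
        self._objects[obj.name] = obj

    def get(self, name: str) -> VisualObject:
        return self._objects[name]

    def push_change(self, name: str, description: str) -> VisualObject:
        """Update an object once; every later prompt picks up the change."""
        current = self._objects[name]
        updated = replace(current, description=description,
                          version=current.version + 1)
        self._objects[name] = updated
        return updated

    def build_prompt(self, template: str) -> str:
        """Fill {name} placeholders with the current object descriptions."""
        values = {name: obj.description for name, obj in self._objects.items()}
        return template.format(**values)


repo = ObjectRepository()
repo.declare(VisualObject("hero", "a tall woman in a red coat", seed=42))
shot_1 = repo.build_prompt("{hero} walking through a market")

repo.push_change("hero", "a tall woman in a green coat")
shot_2 = repo.build_prompt("{hero} boarding a train")
```

The design choice mirrors a traditional design system: the object is defined in one place, and every shot references it rather than describing it again from scratch.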

4. Influence basic composition and photography

Certain visual terms in photography and cinematography, like blocking, are complicated to convey by text prompts alone. That’s why storyboarding is effective in early cinematographic phases. Visuals describe shots and scenes instead of words.

Playing around with the composition on a primitive level can give you a better idea of how realigning elements will influence the overall scene. It also helps understand the relationships between objects.

Moving objects based on simple composition controls can bring back much freedom in the creative process.

When photographers translate their abstract vision into a real-life studio environment, they talk about specifics: exposure, focal length, and light density. Providing photographic settings for compositions can bring more experimentation, fine-tuning, and confidence into AI workspaces, especially when artists with traditional backgrounds use the tool.

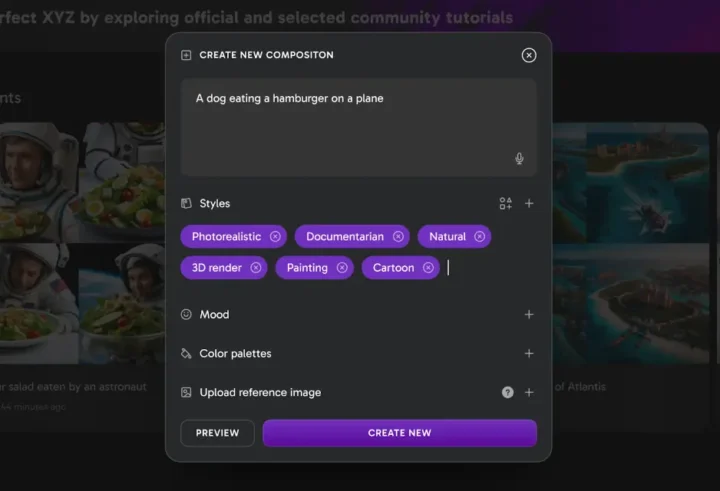

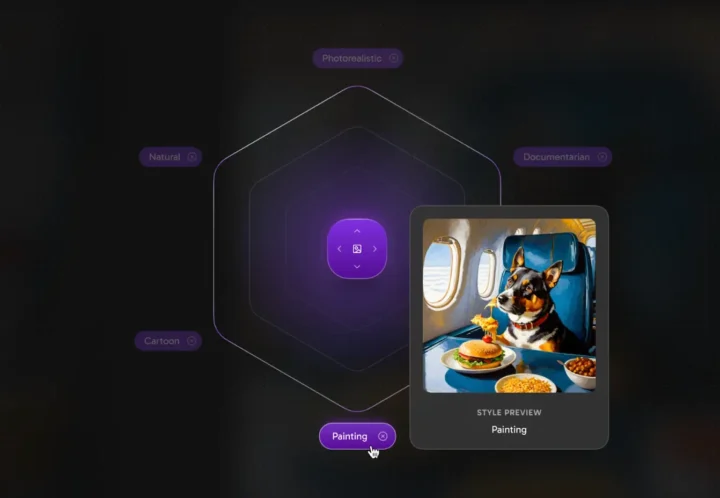

5. Playing around with visual styles

Creatives can have a coherent idea of what style they want to use. Still, there are instances where discovering that style requires a lot of trial and error. Styles defined at the prompt level guide the AI but also limit its stylistic space. This sets the look and feel of generated images into one visual direction.

Once artists have a basic concept of the feelings they want to evoke, they should be able to enhance the basic prompt with styles. This creates a compass where simple style navigation is possible.

In this concept, styles are represented as chips that users can preview, add, remove, or arrange in a specific order. The output is represented as a drag-and-drop controller within the analog style space, which is displayed as a radar. A quick, low-res preview provides a glance into the new style.

After the AI rendering, we should be able to influence the style while keeping the composition itself. A style radar can open up significant granularity and experimentation around the look and feel of the AI-generated image. This would also enable creatives to try different approaches, mirroring the actual creative process. Using the radar can also turn style transfer into an analog setting, so styles can be muted or loud based on preferences.
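One way to think about the radar is as a mapping from the controller’s position to a weight per style. The sketch below is only one possible interpretation: it assumes the style chips are spread evenly around a circle and that the controller sits at a point `(x, y)` inside it. The `style_weights` function and the example style names are hypothetical.

```python
import math


def style_weights(styles, x, y):
    """Map a controller position inside the unit circle to per-style weights.

    Each style gets an axis on the radar. Dragging the controller toward an
    axis makes that style louder; the center mutes every style.
    """
    weights = {}
    for index, style in enumerate(styles):
        angle = 2 * math.pi * index / len(styles)
        # Project the controller position onto this style's axis.
        projection = x * math.cos(angle) + y * math.sin(angle)
        # Styles on the opposite side of the radar get no influence.
        weights[style] = round(max(0.0, projection), 3)
    return weights


styles = ["noir", "watercolor", "cyberpunk", "pastel"]

center = style_weights(styles, 0.0, 0.0)        # all styles muted
toward_noir = style_weights(styles, 0.5, 0.0)   # noir at half strength
blended = style_weights(styles, 0.6, 0.6)       # noir and watercolor mixed
```

Because the weights are continuous, the low-res preview could update while the controller is being dragged, which keeps style exploration separate from the composition itself.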

Make AI image generation a collaborative experience

While the professional design tools we use daily have grown more and more collaborative for years now, AI tools are not really focusing on this aspect. Writing prompts that work well can get difficult. You are left alone to compose these prompts and analyze the results coming in from AI instead of involving your team, peers, or even clients.

Inviting people into generative workspaces is likely to matter more soon, as deciding what AI output aligns with specific goals is still a collaborative effort. The mechanics of collecting feedback for AI are not much different from presenting traditional artworks to our collaborators.

To summarize

Improvements in AI are faster than any technological progress in recent times. Still, the main phases of this advancement are fairly traditional (and traditionally flawed as well): Just figure out how to make things work in the backend and focus on the interface later. This is why AI output is getting significantly better while UX remains generally the same, focusing heavily on prompts.

Yet, as mentioned earlier, AI image-generation tools need to provide creatives with more control to become trustworthy instruments in their toolbox. To get there, it’s essential to mirror the creative process as much as possible, and this is where UX is key. Here, product designers must listen to and analyze the specific requirements of these experts to design a workable and manageable AI adaptation. Will the prompt-heavy workflow keep its appeal? This question should be carefully examined in terms of usability.

How we can help

At UX studio, we are ready to help you design AI products and interfaces that provide users with control and freedom to experiment. Ensuring early UX involvement in AI product development benefits those who rely on generative tools the most: experts.
