This guide will help you better understand the purpose of usability tests, when to do usability testing, and the various types of usability tests.

Usability tests differ from surveys and focus groups in that you actually watch people use your product live. This way, you get real-time feedback and firsthand data about your product’s usability.

In the words of Steve Krug, “(usable) means making sure that something works well: that a person of average ability and experience can use the thing (…) for its intended purpose without getting hopelessly frustrated.”

What is usability testing for?

Usability testing helps to validate product ease-of-use and functionality. It’s a method of experience research, and makes up the core of UX research. Usability tests are usually qualitative.

In web design, usability tests have a dual purpose: discovering usability issues and indicating what works. All sites have issues, big or small, that are invisible to people who already know how the site is supposed to work.

Users don’t tolerate a bad experience. When their needs or expectations are not met, they’ll get upset or mad, and associate these emotions with your product or service. Research can identify these points of tension.

Usability testing examines the following areas:

- Usability (the ease of navigating your product)

- Accessibility (special needs not met)

- Performance issues (speed or reliability)

- Technical problems (compatibility and integration)

- Functionality gaps (missing or inadequate features)

- Support and documentation (or lack thereof)

- Value proposition (is it easy to understand?)

- Cost (return on investment, hidden costs)

- Security (privacy and data protection)

Finally, you can test competitors’ solutions with the target group to gain insights on what could be changed or improved in your product.

You can do usability testing in-house for less complex problems, like debugging. When it comes to quantitative tests, you should rely on experts.

As a top UI/UX design agency, we’ve helped over 250 companies across various industries. We have experience with problems of all shapes and sizes, and have a great toolbox for solutions. If you feel like you need an objective perspective, contact us.

When to do usability testing

Continuous testing would be, of course, ideal, but it’s not always feasible. You definitely need usability testing in these 4 situations:

1. When you need to understand your user better

If you notice that you’re thinking in terms of abstract personas or ideal customers, take a step back. You may already have enough data on your existing users to get a precise picture. Usability testing helps you go beyond analytics. Instead of getting reports on a surge of purchases, for example, you’ll have details on what worked.

2. When you need to validate a feature, concept or product

Sometimes, it’s difficult to answer basic questions like “is this a good idea?” Usability testing helps you precisely assess user satisfaction and measure effectiveness. It gets you real and quantifiable user feedback on concepts, prototypes or existing solutions.

3. When you need to predict a decision

By seeing how users make choices, you can adjust processes to guide them more effectively towards desired actions, like completing a purchase or finding information. Testing shows what users prefer when it comes to design, layout, and functionality, so you can tailor the product to meet their preferences.

4. When you need constructive, direct recommendations

Usability testing gets you direct user input and targeted feedback. The researchers will highlight priorities, suggest best practices and help you make evidence-based decisions.

📌 Example: 82% of your users drop off on the checkout page of a food supplement webshop. Running a few usability tests focused on the checkout page can reveal the underlying reasons. It might turn out that the page asks for credit card information too early.

Usability testing is useful at various phases of the product design process.

- When forming a concept, you can test low-effort paper prototypes or competitors’ sites to define requirements and explore alternatives.

- At the beginning of a project, you can test the current design solution that you want to improve to avoid costly mistakes. This is the point to validate product-market fit, learn more about potential users, and refine the concepts.

- At the UX design or website redesign phase, you can get early validation to inform your decisions. You can build up a benchmarking process and save costs by implementing user-centered design early.

- During the development phase, it’s still not too late. Usability testing at this stage will create a feedback loop with potential users to help prioritize features and ensure consistency. These will reduce pesky post-launch issues.

Aim to have usability testing as a recurring activity at various stages of your product design process.

When You Shouldn’t Do Usability Testing

Usability testing won’t give you useful insights if you want to:

- …test your product design associations and visuals. It’s an inherently subjective field, so quantitative studies would be of little use. Consider an expert review instead, or try A/B testing with prototypes.

- …get quantitative data about product usage. Usability testing sessions are typically limited in duration, making it difficult to collect extensive quantitative data about long-term usage patterns and trends. Analytics tools will give you a better understanding.

- …identify preferences between different versions of visuals or copy. Usability testing focuses on the big picture. User surveys or focus groups are more than enough for smaller tasks like this.

- …validate desirability. Usability testing focuses on evaluating the ease of use, efficiency, and effectiveness of a digital product, rather than assessing its appeal. Brand perception studies or competitive analysis may be more useful.

- …estimate market demand and value. You should test your value proposition, conduct market research, or try pilot testing.

You can explore more methods and get actionable tips on our UX research blog.

How many usability tests you should do

For deeper results

What makes a representative sample size? Research generally needs at least 30 participants, but usability testing is an exception. It relies on a small, representative sample to detect problems early and cost-effectively. According to the Nielsen Norman Group, testing with five people in one cycle reveals about 85% of all usability issues.
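As a rough sketch of where a figure like this comes from: Nielsen and Landauer modeled the share of problems found by n participants as 1 - (1 - L)^n, where L is the chance that a single participant uncovers a given problem. The default of 0.31 below is their reported average across projects and is only an assumption for any particular product; the function name is ours.

```python
def share_of_problems_found(participants: int, detection_rate: float = 0.31) -> float:
    """Expected share of usability problems found by a group of test participants.

    detection_rate is the assumed probability that one participant
    uncovers a given problem (0.31 is a published average, not a constant).
    """
    if participants < 0:
        raise ValueError("participants must be non-negative")
    if not 0.0 <= detection_rate <= 1.0:
        raise ValueError("detection_rate must be between 0 and 1")
    # Each participant independently misses a problem with probability (1 - L),
    # so all of them miss it with probability (1 - L) ** participants.
    return 1.0 - (1.0 - detection_rate) ** participants


if __name__ == "__main__":
    for n in (1, 3, 5, 10, 15):
        print(f"{n:>2} participants: {share_of_problems_found(n):.0%}")
```

Under these assumptions, five participants land at roughly 84%, and each additional participant adds less than the one before. That diminishing return is the argument for several small rounds rather than one large one.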

After a round, prioritize the problems and work on them, iterate, and test again. Repeated small tests will be more useful than one massive test, as products keep evolving and new issues arise all the time.

The best way to visualize it is the lean UX cycle: think, make, check.

By making usability testing a part of your process, you commit to continuous improvement and stay true to user-centered design principles. This sort of agility makes your product highly adaptable, so you can react fast to industry changes and emerging trends and keep your competitive edge.

Keep in mind, however, that adopting a lean UX mindset may require a cultural shift within organizations accustomed to traditional waterfall or sequential design processes, and it can limit stakeholder involvement. Lean UX may also require dedicated resources for rapid iteration and testing cycles, including time, budget, and access to participants.

For faster results

Another popular framework, RITE (Rapid Iterative Testing and Evaluation), promotes even faster processes and solutions. It favors running one usability test and correcting the discovered problems immediately afterward. Applying this framework allows you to test faster and more frequently.

As for its disadvantages, RITE is a resource-intensive approach with limited depth. It may encourage a bias towards incremental changes rather than more innovative or long-term design solutions, and it makes you dependent on participants’ availability. A canceled call can halt your progress, so make sure to account for that.

For sustainable growth

For sustainable growth, use testing methods that not only address immediate usability issues but also support long-term optimization and continuous improvement.

Set yourself up for success by starting user testing early:

- Create benchmarking studies to establish a baseline of usability metrics and monitor changes over time.

- Do longitudinal studies to observe trends, patterns, and changes in user preferences that can inform your ongoing optimization efforts.

- Integrate analytics as soon as possible to gather data and make your researcher happy.

- Conduct periodic usability audits to assess the overall usability of the product.

- Have recurring, small tests. Steve Krug suggests that a morning a month may be enough, as long as it becomes a habit. These short but regular tests should ideally involve at least three test participants.

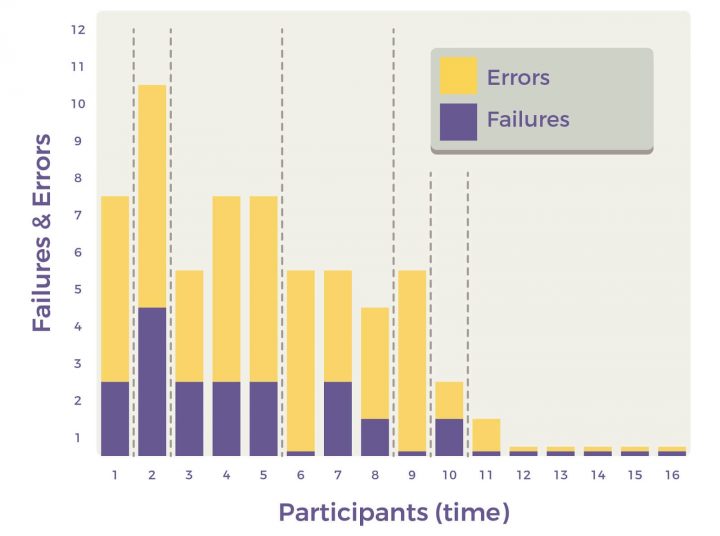

The image above illustrates the number of problems arising from the usability tests over time. The vertical dashed lines mark the iterations. Six rapid iterations let you create an error-free prototype, so usability tests don’t need to be a major undertaking.

Types of usability testing

Usability testing comes in various forms to fit the kind of information you want to gather. Let’s review their pros and cons.

Remote or in-person usability testing

Remote

Remote usability tests are becoming more common. According to Inge De Bleecker and Rebecca Okoroji, the benefits of remote usability testing are the following:

- Global reach: you can target any market, regardless of time zones.

- No travel: saves time and costs

- Less resource intensive: you don’t need lab equipment and a physical space

- Faster turnaround: calls are easier to schedule than in-person meetings

- Users have familiar equipment and environment: you get a more realistic picture of users interacting with your product.

However, issues arise with distribution: getting your product to the tester. Besides logistics, security concerns should be considered, especially when testing an unreleased product.

In-person

In-person testing is traditionally done in a controlled lab environment with specialized equipment, but a room or an office may also be suitable. Tests no longer require two-way mirrors, as observers can join online. Similarly, equipment costs decreased over the years. In most cases, all you need is a laptop and some software, for example, for eye-tracking.

For mobile testing, you may need software for mirroring, a camera recording the screen as well as the user’s hands, or a sled to keep the phone in a fixed position. Carol M. Barnum’s Usability Testing Essentials offers a detailed breakdown of all the equipment you may need.

Usability tests can also occur in the wild. Our research professionals have run usability tests at football venues and have tried to catch participants at airports and on crowded streets.

Moderated or unmoderated usability testing

Moderated

During moderated sessions, a moderator (typically the researcher) asks specific questions and gives participants a set of tasks to perform. The moderator notes task success as well as the frequency and severity of the different types of errors.

The real-time interaction allows for flexible adaptation. For example, if a test subject makes an interesting remark, the moderator can ask them to elaborate. Providing support to the participants puts them at ease, generally resulting in more honest and in-depth feedback and higher levels of collaboration.

However, the observer effect will slightly skew the results, and there’s more potential for bias. Scheduling is also more complicated than running an unmoderated test.

Unmoderated

Unmoderated usability tests typically involve screener questions, a task or survey, and follow-up questions. They are scalable, convenient, and cost-effective. The test subject has more autonomy and bias is reduced.

On the other hand, test subjects may easily get confused without opportunities for probing, clarification, and contextual understanding. Without direct oversight from researchers, quality control is not guaranteed, and the results may be superficial. Technical issues may also arise.

Standardization can also limit insight, as context and nuances may get lost.

Quantitative or qualitative usability testing

Quantitative

This type of research is rigorously scientific. Researchers apply statistical analysis techniques to test hypotheses, identify patterns, and make data-driven decisions based on empirical evidence. The results are generalizable, making it a very efficient method that can save time and money.

Qualitative

Qualitative tests are designed to provide rich, detailed insights into user behaviors, preferences, and perceptions. This allows researchers to understand the “why” behind user actions.

Analyzing qualitative data from usability tests can be labor-intensive and time-consuming, requiring researchers to manually code, categorize, and interpret findings, which may introduce subjectivity to the analysis and lead to potential inconsistencies and variability in results.

Main takeaway

Researchers’ approach and methodology might change when working with an agency on a short-term project or as part of a product team in the longer run. They’ll start by adjusting the scale, rigidity, and frequency of your projects’ usability studies.

Whatever the method, usability testing will put you on the right track of giving users the functionality they want and can use. It will also help you avoid costly mid- and post-development changes. You can book a free consultation with us to learn what sort of research service may be best for you.

The four steps of the usability testing process

Step 1: Plan your study

Clarify details

To properly plan your usability study, you need to clarify the following:

- The product to test: Are you testing the onboarding of a mobile app, a website’s filtering system, or a kiosk interface prototype?

- The platform: When testing a mobile app, determine if the OS matters and if it might bias the study. If so, let participants choose the OS.

- The research objectives: Turn your high-level objectives into concrete usability testing questions. Can users understand passwordless registration and login? Can they easily navigate the product detail page? Concentrate on a few tasks, pages, areas, and assumptions to test at once.

- The research methodology: Base your decision on the type of product, project scope, objectives, and target audience. Choose the more feasible one (technically and financially).

- The target audience:

  - Who are they?

  - How many participants do you need?

  - How are you going to reach them?

  - What will you give as an incentive? (money, gift card, discount, tickets, lifetime access to your product, etc.)

  - How do you make sure the participants are your target audience?

📌 Example: If you target people with high-demand jobs and salaries, either go to them or schedule a remote test. A neurosurgeon won’t have time to come to you. Always adapt to the participants’ lifestyle.

Prepare a study plan

Then write a usability study plan which includes the following:

- Goals

- Participants

- Setting (where, when, device type)

- Roles (moderator, observer, note-taker)

- Tasks

👉 Pro tip: Aim to have no more than two people with you during a usability test. Having too many people in the room can lead to frustration and intimidation.

Write a usability testing script

Testing can make participants feel uncomfortable if they think there are right and wrong answers. Make sure your script starts with the reassurance that there’s no way to fail this test, and you’re interested in their honest reactions.

Then inform users about the purpose of the usability test and how their feedback can help create powerful digital products. Adjust the usability test script according to participants’ prior knowledge. Some explanation is required on what a prototype is if they have never heard of the concept. You may also want to briefly introduce yourself and the company.

Include intro questions and mini-interviews. They build rapport between you (the moderator) and the test participant, and they provide an opportunity to validate user personas. Estimate the time they will take and include it in the script. Make sure that the questions can be adjusted to the participants’ level of involvement. If they’re very forthcoming, you may need to strike some questions to stay on schedule, so you’ll need to know the priority of each question.

Next, come up with a realistic scenario. You want to hear users’ personal reactions to the product. Help them engage in the testing. Come up with the exact usability testing questions and tasks you want your participants to perform. They should find the scenarios and tasks as realistic as possible.

📌 Example: “As a homeowner with a garden, you want to install an automated irrigation system, so you don’t have to schedule your daily routine around watering. You google the term with your city name and stumble upon this website.”

Now that you’re all prepped, let’s gather participants.

Step 2: Get user test participants

Challenges

Usability testing recruitment can be challenging. It can make you feel like a TV commercial trying to reach out and “sell” the usability testing to as many people as possible.

Many people have never heard of UX research, so the whole thing might sound suspicious and cumbersome. Find the recruitment script that works best, on the phone or by email, to evoke trust rather than suspicion or confusion.

Finding the right person

When deciding who to target, have a well-defined persona or a particular group of people in mind. The main rule of thumb would be to find someone who has not tried your application yet. For a more specialized product, you’ll need someone with a certain level of expertise.

Think about where to look for the kind of participants you want: what social media platforms or websites they use or where they meet offline. You can also use recruiting software. If you have no time to do usability testing yourself, you can ask a dedicated user experience design agency to conduct usability testing for you.

Part of finding the right test subjects is to double-check if they would be a good fit.

- First of all, check their availability and make sure they can commit.

- Make a screener to check if they fit your criteria and seem open to share their thoughts. You can have an online form or pose the screening questions directly over the phone.

- Set expectations about the length of the test and the basic task they will perform, and tell them if you’ll make a recording or have them sign an NDA.

- Last but not least, detail the compensation they’ll receive for their time.

Once you have your pool of dream candidates, make sure to follow up with them.

👉 Pro tip: Always schedule extra user interviews as 10-20% of scheduled tests are canceled by participants.
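To turn that cancellation rate into a number of invitations, here is a minimal sketch. It assumes every scheduled session cancels at the same rate and plans for the expected number of completed sessions, so it is a planning aid rather than a guarantee. The function and parameter names are illustrative.

```python
def sessions_to_schedule(target_completed: int, cancellation_percent: int) -> int:
    """Sessions to book so that the expected number of completed sessions
    reaches target_completed, given an assumed cancellation rate in percent."""
    if target_completed < 0:
        raise ValueError("target_completed must be non-negative")
    if not 0 <= cancellation_percent < 100:
        raise ValueError("cancellation_percent must be between 0 and 99")
    remaining_percent = 100 - cancellation_percent
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-target_completed * 100 // remaining_percent)


if __name__ == "__main__":
    for percent in (10, 20):
        booked = sessions_to_schedule(5, percent)
        print(f"{percent}% cancellations: book {booked} sessions for 5 completed tests")
```

For a round of five tests, this suggests booking six sessions at a 10% cancellation rate and seven at 20%.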

Step 3: Lead usability tests

Pilot test

You’ll have to test the test itself. Take it for a dry run or do a pilot. The pilot participant doesn’t have to come from the target audience: run a session with a colleague or ask your friends. Then go through the entire thing like you’d do in real life. This will help you catch glitches in the prototype, make sure that your instructions are clear, and that you calculated your time right.

Use this checklist for a successful usability test:

- Have a quiet room booked for the session with a stable internet connection.

- Remind the user about the usability test.

- Print the usability testing scripts.

- Set up the equipment and the recording. Double-check if everything works properly.

- Welcome the participant and (re-)introduce yourself.

- Summarize the procedure (“We’re going to test a food delivery application specializing in Asian food”).

- Emphasize that you are testing the app, not the participant: there are no wrong answers.

- Ask them to think out loud and comment on any action they take.

- Remind the participant that you want to know how they would use the product without help, so you cannot assist them.

- Present the consent form and NDA if needed.

- Re-share some practical information and allow the participant to ask questions: “The test is going to take one hour.”, “If you would like to stop the test, please let me know.”, “Do you have any questions about the test? Can we start?”

During the test

Scenario

Make the scenario specific and realistic. Paint a vivid picture for participants so they can easily imagine the situation.

📌 Example: “Imagine you are a marketing manager at a company. Your task is to choose a newsletter service provider.”

Ask warm-up questions relevant to the test:

- Experience: How do they use similar products?

- Intentions: Why do they use those products?

- Needs: What are their typical issues? What are they looking for?

- Knowledge: What do they know about the topic?

Tasks

At UX studio, we always give participants tasks to focus their attention on some area or aspects of a user interface, whether it’s an overall impression of the landing page or the process of buying shoes on an e-commerce platform. There are three types of tasks:

- Broadly interpreted: These instructions show how users start using the application and figure out what it does. “Discover the ways you could use this application to enhance your performance.” “Please explore this landing page and see what this company offers.”

- Tasks related to a particular goal: These tasks aim to test specific processes. “Try to buy a TV.” “Sign up.”

- Tasks related to specific interface elements: “Where can you subscribe to a newsletter here?” “How can you put an ebook in the basket on this screen?”

Handy psychology tips

- Be non-judgemental.

- Don’t boss them around: always be polite.

- Don’t show reactions indicating whether participants do the “right thing.” Remain neutral.

- Make your users feel relaxed.

- Prompt participants to speak their mind and think out loud.

- Pick up non-verbal cues (frowning, fidgeting, biting lips, etc.)

- Don’t be afraid of silence.

- Paraphrase what they said to double-check meaning (“So, you are saying you expected this button to take you to the checkout page, right?”)

- Ask back (“Will clicking this button pause or exit the game?” – “What do you think it will do?”)

Steve Krug’s “Things a Therapist Would Say” highlights many situations that often occur in usability testing, offering possible verbal reactions from the moderator’s side. In our experience, these situations come up regularly, so it pays to prepare standard questions and responses for them.

After the test

Feeling like a guinea pig can frustrate participants. It might happen even if you try your best to emphasize they are not the ones being tested. Participants might remain tense until the very end of a session.

To help your participants relax, ask them how they felt and how they perceived the experience. A bit of post-test chit-chat signals that the “serious” part of the test has finished and that they can share their honest opinion. It helps some people open up, and they might give a lot of feedback during the post-test questions.

Post-test question examples

- Did I forget to ask you about anything? / Is there anything you’d like to add?

- On a scale from 1 to 10, how easy was the product to use?

- What are the three main things you liked about it?

- What are the three things that confused you or you didn’t like?

- Was there anything you missed?

Don’t forget to thank participants afterward and assure them their feedback proved valuable.

What if things go wrong?

Nothing. Glitches happen. They happen to everyone. It happens because researchers work with different prototypes, recording devices, and software.

Own up to it. Tell your participants you need to fix something or deal with an issue, but not at the expense of their time. Always stick to the pre-agreed time. If any glitch causes a major setback, politely ask participants if they have more time or whether you should reschedule.

Don’t panic!

Brace yourself. These examples show what can go wrong in a user test and how to set them right.

- The recording is cut off or not working. Continue the test even if the recording is off, and write up your notes right after the session.

- The prototype does not work properly. Steer it in the right direction by coming up with different tasks and guide users back to a familiar place. If the prototype doesn’t work, use the time on your hands to conduct an interview. You might discover something important.

- The user looks pretty frustrated and does not follow the “think out loud” protocol. Ask probing questions. Emphasize once again they cannot do anything wrong and help them imagine themselves naturally interacting with the product.

- Comfort the user. Never let the participants feel frustrated. Treat participants like MVPs (most valuable person) even if they may prove difficult. Thank them. Make them feel comfortable. Emphasize you are not testing them, but a product.

Step 4: Analyze & Report

A successful testing session doesn’t mark the finish line. It’s just the start. You need to make sense of all the notes you’ve taken, group the feedback, prioritize it, and report back to your team or your client.

- A quantitative analysis will focus on task success rate, error rate, efficiency metrics and user satisfaction.

- A qualitative analysis will focus on observations, user feedback, and critical incidents analysis.

- Always compile and categorize issues, and rate their severity. Identify root causes whenever possible.

- Summarize key findings and generate insights.
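The quantitative metrics named above can be computed from simple per-task session records. The sketch below is one possible setup, not a standard: the `TaskAttempt` fields, the `summarize` helper, and the sample numbers are all invented for illustration.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class TaskAttempt:
    participant: str
    task: str
    completed: bool   # did the participant finish the task unaided?
    errors: int       # number of wrong turns or mistakes observed
    seconds: float    # time on task


def summarize(attempts: list[TaskAttempt], task: str) -> dict[str, float]:
    """Task success rate, mean error count, and median time for one task."""
    rows = [a for a in attempts if a.task == task]
    if not rows:
        raise ValueError(f"no attempts recorded for task {task!r}")
    return {
        "success_rate": sum(a.completed for a in rows) / len(rows),
        "mean_errors": mean(a.errors for a in rows),
        "median_seconds": median(a.seconds for a in rows),
    }


# Made-up sample data for a hypothetical "checkout" task.
attempts = [
    TaskAttempt("P1", "checkout", True, 0, 95),
    TaskAttempt("P2", "checkout", True, 2, 140),
    TaskAttempt("P3", "checkout", False, 3, 210),
    TaskAttempt("P4", "checkout", True, 1, 120),
]

if __name__ == "__main__":
    print(summarize(attempts, "checkout"))
```

With only a handful of participants, treat these numbers as a way to compare rounds of testing rather than as statistically reliable estimates.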

To avoid ending up with an enormous mass of findings, make sure to prioritize the usability issues.

If possible, set up a meeting after a round of tests, so you can help stakeholders understand the results and answer emerging questions. Provide snippets of test recordings, include screenshots and quotes from the participants. It will shift the nature of the usability tests from a mysterious research activity to a tangible method contributing to informed product decisions. It will also help organize the vast amount of feedback, information, and insights from usability testing sessions.

For this, develop your own standards and a system. Distinguishing between usability issues, positive findings, feature requests, and general observations makes a good starting point. There’s no “one size fits all” approach to reporting. It depends on your client or team, how you work together, and the type of usability tests you’re running.

Analysis and reporting usually go hand-in-hand as you typically don’t have time to rewatch everything twice.

What type of usability testing report do you need?

You’ll generally need two or three types of reports.

1. Prepare an executive summary with the main findings. You can do it test-to-test, or with compiled data. This is typically shared with stakeholders.

2. Share your notes, which should include the following:

   - Methodology: participant demographics, tasks performed, testing environment, and tools used.

   - Detailed findings for each task or feature tested.

   - Usability issues categorized by severity.

   - User quotes and observations.

   - Video clips or screenshots illustrating key points.

   - Recommendations for design changes.

   - Appendices with raw data and notes.

   This is typically shared with the team working on the prototype.

3. Depending on the type of usability tests you did, it may be useful to also create a comparative report, a heuristic evaluation, or A/B testing results.

Usability testing might require five or six iterations and three to four design sprints. It always depends on your product roadmap, product strategy, and how much research you set out to do.

You conduct usability testing to iterate on your product and improve its usability. If you only ask how users feel, the observations will be highly subjective and the interpretations purely hypothetical. Actionable insights come first, and they should be highlighted in your notes as well.

Research system or simple test reports?

For more extensive projects with weekly usability tests, it may be worth building a research system that synthesizes your endless observations and insights into a searchable, consistent repository.

👉 Pro tip: For a leaner approach, try to include the essentials only, such as task, observation, location (page, step), and issue severity.

Centralized data storage like this helps with searchability, collaboration and long-term data preservation. Standard documentation makes sure that your best practices live on, which makes onboarding easier too. Download our detailed guide for building a research repository.
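As an illustration of the lean record described in the pro tip above, a finding can be stored with just four fields: task, observation, location, and severity. This is a minimal sketch; the four-level severity scale and all names are assumptions, so swap in whatever scale your team already uses.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    # Hypothetical four-level scale; higher means more urgent.
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Finding:
    task: str
    observation: str
    location: str      # page or step where the issue occurred
    severity: Severity


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Return findings ordered from most to least severe."""
    return sorted(findings, key=lambda f: f.severity, reverse=True)


def by_location(findings: list[Finding], location: str) -> list[Finding]:
    """Return the findings recorded for one page or step."""
    return [f for f in findings if f.location == location]


if __name__ == "__main__":
    # Invented example entries.
    findings = [
        Finding("Sign up", "Missed the password rules", "Registration form", Severity.MEDIUM),
        Finding("Buy a TV", "Could not find the pay button", "Checkout", Severity.CRITICAL),
        Finding("Buy a TV", "Hesitated at the delivery options", "Checkout", Severity.LOW),
    ]
    for f in prioritize(findings):
        print(f"{f.severity.name:<8} {f.location}: {f.observation}")
```

Keeping the record this small makes it easy to fill in during a session, and the same fields can later become columns in a spreadsheet or a research repository.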

Key Takeaways

Usability testing gives you data-based insights into user behavior. This is key for getting direct feedback and uncovering usability issues, performance problems, and functionality gaps, so users have an improved experience with your product.

Usability testing is valuable at various stages, from concept formation to product launch. Continuous usability testing throughout the product lifecycle ensures ongoing improvements and helps avoid costly mistakes.

There are various methods of usability testing, including remote versus in-person, moderated versus unmoderated, and quantitative versus qualitative.

The process involves planning the study by defining objectives and methodology, recruiting suitable participants, conducting tests with realistic scenarios, and analyzing and reporting findings.

If you need help with any of this, drop us a line and let’s see what we can cook up together.