What most teams get wrong when choosing an A/B testing solution

Most companies, whether they have a mature data or product organization or not, are already doing some form of A/B testing.

But there are many ways to get it wrong when choosing an A/B testing solution. In my time as an account manager at an A/B testing tool company, and now at Mixpanel, an analytics platform that integrates with all the best testing tools, the biggest mistake I’ve seen teams make is trying to use an “all-in-one” solution. Small teams with limited or no resources may see this approach as the easiest and most cost-effective option, but it’s not ideal, and it rarely pays off.

I’ve helped many teams work through their A/B testing needs, set up testing and analytics tools, and refine their testing approaches. It doesn’t take building a giant data stack to get it right. I’ll walk you through some of the learnings I’ve picked up along the way, including:

- Why it’s better to go with purpose-built instead of one-size-fits-all solutions

- How to build a best-of-breed A/B testing stack

- Nuances to keep in mind as you’re vetting solutions

Why a best-of-breed approach is better than tacking on add-on solutions

Some teams will look at the solutions on the market and think, “Why can’t I just use one of those add-on analytics tools?” or “There are tools that say they can do both A/B testing and analytics. Why can’t I just use one of those?”

There are a few reasons you shouldn’t, some of which we’ve covered here. The one I’d highlight is that A/B testing needs to be thorough. Setting up one tool instead of two sounds easier, but generalist tools usually don’t offer the customization needed to reach the level of testing granularity that clear results often require.

For example, an add-on A/B solution may let you test front-end elements, but it likely won’t support more sophisticated work like optimizing your product recommendation algorithms on the back end. That requires a best-of-breed approach, where you choose the best individual tool for each task.

Just as important, an all-in-one tool is unlikely to have the same level of statistical rigor behind it. At a previous A/B testing company I worked at, for example, multiple statistics PhDs designed our results engine, and they wrote a white paper on the methodology with Stanford.

Finally, even though some A/B testing tools say they have some analytics built in, a specialized analytics tool will give you significantly more control over how you cut the data and help you unlock a lot more value from the tests you’re running. (More on this in just a bit.)

A small, but mighty, A/B testing stack

Don’t worry: for most companies, the ideal testing stack is small, if you can even call it a stack. You’ll need only two tools:

- An A/B testing tool

- A robust analytics tool

An A/B testing tool

To run A/B tests, you’ll first need a testing tool. Most teams know to look for the usual things—the option to run A/B tests or multivariate tests, heat maps, session recordings, etc.

There are a ton of tools to choose from, and almost all of them—Optimizely, Apptimize, AB Tasty, and so on—integrate with analytics tools (which might just be a sign that combining two purpose-built tools is the way to go).

Most of their feature sets are similar (almost every tool lets you split traffic, assign variations, and create reports), so the right choice really comes down to the subtle nuances of your team’s specific needs.
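Under the hood, "split traffic and assign variations" is simple. One common technique, shown here as a minimal sketch rather than any particular vendor's implementation, is deterministic hash bucketing: hash the experiment name plus the user ID into a number between 0 and 1, then map that number onto the variant weights. The function name `assign_variant`, the experiment name, and the user IDs are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants: list[str], weights: list[float]) -> str:
    """Deterministically map a user to a variant (hypothetical helper)."""
    # Hash the experiment name together with the user ID, so the same user
    # can land in different buckets for different experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Turn the first 15 hex digits (60 bits) into a number in [0, 1).
    bucket = int(digest[:15], 16) / 16**15
    # Walk the cumulative weights until the bucket falls inside one.
    cumulative = 0.0
    for variant, weight in zip(variants, weights):
        cumulative += weight
        if bucket < cumulative:
            return variant
    return variants[-1]  # guard against floating-point rounding in the weights


variant = assign_variant("user-42", "new-checkout", ["control", "treatment"], [0.5, 0.5])
print(variant)
```

Because assignment is a pure function of the IDs, a returning user sees the same variant on every visit without any stored state. Setting the weights to `[1.0, 0.0]` turns the same mechanism into a plain on/off switch, which is why many testing tools can also serve as feature flagging tools.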

Some important considerations when choosing an A/B testing tool:

- User experience: This is the hardest thing to gauge just by looking at a product’s website or demo video. You have to click around a sandbox or demo environment and try it out for yourself to truly get a sense of how easy the tool is to use.

- Testing methodology: Testing tools differ in their statistical methodology. Some use frequentist testing, while others use a Bayesian approach. More and more tools seem to be adopting Bayesian methods, but they aren’t perfect either (for example, results can depend on the prior you choose), and you should be able to explain your tool’s approach if your team asks.

- Stat sig calculator: Some A/B testing tools have a statistical significance (“stat sig”) calculator built in, but you can also easily find one online. These calculators are important because they estimate how much traffic each variant needs to reach statistical significance, which, given your daily traffic, tells you roughly how long you’ll need to run the test. If you use a calculator that isn’t built into your A/B testing tool, keep in mind that its methodology needs to match your testing tool’s methodology. (If you’re using a Bayesian calculator but your A/B testing tool uses sequential probability ratio testing, you might be left wondering, “Why is my testing tool telling me I need so much more time than my calculator?”)
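To show what a stat sig calculator is doing, here is a minimal sketch of the classic fixed-horizon, frequentist sample-size formula for comparing two conversion rates with a two-sided z-test. It is an illustration, not any vendor's calculator: Bayesian or sequential methods (like the sequential probability ratio testing mentioned above) use different math and will generally return different numbers, which is exactly why the methodologies need to match. The function names and the traffic figures are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed in EACH variant to detect `relative_lift` on a conversion
    rate with a fixed-horizon, two-sided, two-proportion z-test."""
    p1 = baseline                          # control conversion rate
    p2 = baseline * (1 + relative_lift)    # conversion rate we hope to detect
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    root = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
            + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2)))
    return math.ceil(root ** 2 / (p2 - p1) ** 2)


def days_to_run(n_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Convert a per-variant sample size into calendar days of traffic."""
    return math.ceil(n_per_variant * variants / daily_visitors)


# Hypothetical inputs: 10% baseline conversion, hoping to detect a 10% relative lift.
n = sample_size_per_variant(baseline=0.10, relative_lift=0.10)
print(n, "users per variant")
print(days_to_run(n, daily_visitors=2_000), "days at 2,000 visitors/day")
```

With these made-up inputs, the sketch asks for roughly 14,750 users per variant, or about 15 days at 2,000 visitors a day split across two variants. Halving the lift you want to detect roughly quadruples the required sample, because the difference between the two rates is squared in the denominator.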

| Pro tip: Technically, you could also have a feature flagging tool in your stack, but most A/B testing tools can act as feature flagging tools anyway (since “feature flagging” is just turning a feature on and off). |

A robust analytics tool

If you want to enrich the results of your A/B tests and dig deeper into things like cohorts and user flows, you should also have a good analytics tool that integrates with your A/B testing tool.

This will make it much easier for you and your team to unlock deeper insights from your A/B test data (which generally captures the behavior of all users because you want to cast a wide net to reach stat sig). Your analytics tool is what will help you drill down into this data and slice and dice it however you want.

Some important considerations when choosing an analytics tool for A/B testing:

- Ease of cohorting users: Let’s say you want to analyze how an A/B test affected your power users specifically. Your analytics tool should let you group the users who were exposed to that test into cohorts so that you can see how each group reacted. For example, in Mixpanel you can create cohorts to see the impact of each test variant on the groups of users you care about.

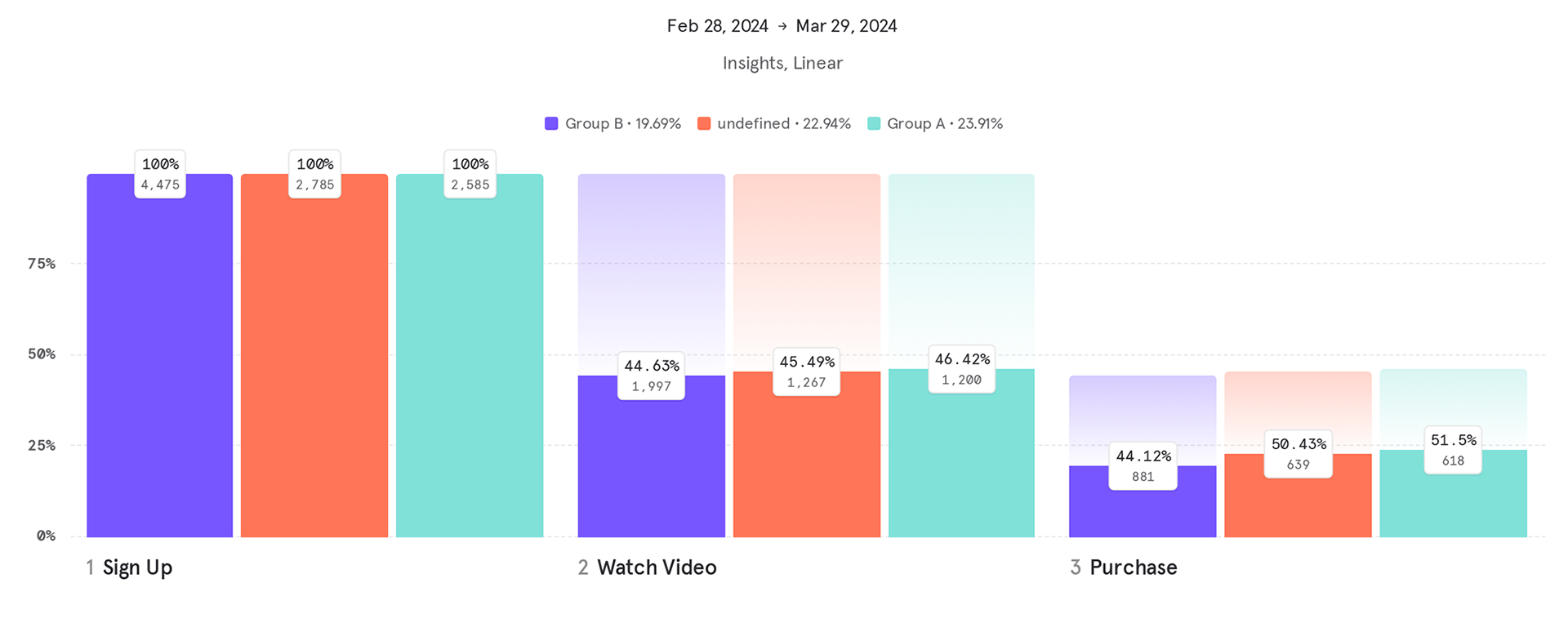

- Ability to enrich other reports and data: Outside of A/B testing, teams monitor a host of other data. For example, most businesses keep an eye on conversions in their primary acquisition funnel. An analytics tool lets you go one step further and enrich that funnel significantly with your A/B testing data: because you can see which users were exposed to which variant, you can estimate each variant’s impact on overall conversions. Here’s an example of what it can look like in Mixpanel:

- Downstream impact visualization: A good analytics tool will show you not only the results of an A/B test but also how it influences later actions in a user’s journey. (For example, what did users do immediately after signing up? What are the most common actions taken after opening the app?)
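To make the cohort and downstream ideas concrete, here is a minimal sketch of the underlying join, assuming the testing tool's exposure data and your analytics events have already been matched on a shared user ID. All function names, event names, and data are hypothetical; in practice, an analytics tool's cohort and flow reports do this for you without code.

```python
from collections import Counter, defaultdict


def conversion_by_cohort(exposures, cohorts, converted):
    """Conversion rate for each (cohort, variant) pair.

    exposures: user_id -> variant assigned by the A/B testing tool
    cohorts:   user_id -> cohort label from the analytics tool ("other" if absent)
    converted: set of user_ids who completed the goal event
    """
    totals, wins = defaultdict(int), defaultdict(int)
    for user, variant in exposures.items():
        key = (cohorts.get(user, "other"), variant)
        totals[key] += 1
        wins[key] += int(user in converted)
    return {key: wins[key] / totals[key] for key in totals}


def next_action_by_variant(exposures, events, after="sign_up"):
    """Most common event each variant's users performed right after `after`.

    events: user_id -> that user's event names in time order
    """
    counts = defaultdict(Counter)
    for user, variant in exposures.items():
        sequence = events.get(user, [])
        for current, following in zip(sequence, sequence[1:]):
            if current == after:
                counts[variant][following] += 1
                break  # count only the first occurrence per user
    return {variant: c.most_common(1)[0][0] for variant, c in counts.items()}


# Hypothetical data, already joined on a shared user ID.
exposures = {"u1": "control", "u2": "treatment", "u3": "control", "u4": "treatment"}
cohorts = {"u1": "power_user", "u2": "power_user"}
converted = {"u2", "u3"}
events = {
    "u1": ["sign_up", "browse"],
    "u2": ["sign_up", "create_project"],
    "u3": ["sign_up", "browse", "purchase"],
    "u4": ["sign_up", "create_project"],
}
print(conversion_by_cohort(exposures, cohorts, converted))
print(next_action_by_variant(exposures, events))
```

Keeping assignment in the testing tool and segmentation in the analytics tool means the only shared contract is the user ID plus the experiment and variant labels, which is what the integration between the two tools carries.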

| Pro tip: Keep in mind that downstream visualizations and enriched reports may not be statistically significant (since they typically cover smaller slices of the audience that was exposed to the test), but that information can still be used directionally to understand the impact on other core user funnels and behaviors. |
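To see why a smaller slice can miss significance even when the effect is identical, here is a minimal sketch using a standard pooled two-proportion z-test. This is a frequentist method chosen for illustration; your testing tool may use a different one. The numbers are hypothetical: the same 10% to 11% lift, measured once on the full exposed audience and once on a downstream slice one-tenth the size.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Same observed lift (10% -> 11%), very different sample sizes.
full = two_proportion_p_value(1_000, 10_000, 1_100, 10_000)
slice_ = two_proportion_p_value(100, 1_000, 110, 1_000)
print(f"full audience p = {full:.3f}")    # about 0.021
print(f"10% slice p     = {slice_:.3f}")  # about 0.466
```

The lift is the same in both cases; only the sample size changes. That is why slice-level results are best read directionally rather than as conclusive wins or losses.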

Moving from an all-in-one or suite solution can be simpler than you think

Teams that are used to all-in-one testing solutions may think a (short) testing stack would be too hard to implement or use. Here are some of the most common questions I’ve addressed around this:

- Can our teams support the level of effort required to set up these tools? For example, will you need engineers, or is the tool user-friendly enough that your PMs can use it? The best stand-alone tools today, including Mixpanel, tend to offer implementation options that require little or no engineering resources, so this rarely needs to be a concern.

- What integrations will I need to worry about? Ideally, your tools will integrate with other tools in your stack, but at a minimum, your A/B testing tool and analytics tool should integrate with each other. Mixpanel and our integration partners (including all the A/B testing tools mentioned earlier) embrace the data tools ecosystem approach and are always adding as many new tool connections as we can. While you only need testing and analytics to get up and running with useful A/B testing insights, there are many solutions in today’s data tool ecosystem to sync with your test data and help your teams build and operate better and smarter.

- Will I need technical experience to interpret the data and results from a testing stack? Having testing and analytics in the same software suite can make them easier to use together (and sometimes it does). But the best data tools today focus not only on integrations but also on self-service features that let non-technical team members run and analyze tests just as easily as technical members. Mixpanel shines here, as do many of our best A/B testing tech partners.

What it looks like when you choose the best tools for the job

A good A/B testing stack will look slightly different for every organization and should take into account your team’s specific needs, skills, and bandwidth.

While it might be tempting to go with an all-in-one tool, you’ll likely miss out on the granular ways to cut data, the user segmentation, and the analytical rigor that purpose-built A/B testing and analytics tools provide.

If you’re interested in seeing how businesses like DocuSign and Olo are using Mixpanel to increase conversion rates and cut down on data analysis time, try it for free!