
In today’s AI-driven world, excitement about artificial intelligence is widespread, and numerous tools promise to shape our lives and the world. But with so many options flooding the market, it’s easy to feel overwhelmed. This blog post guides you through the maze of AI tools: we uncover the hurdles of current AI-powered research tools and spotlight the most promising ones to keep an eye on.

Overall, our expectation of AI is clear: to tackle our work’s tedious and monotonous aspects. Let’s imagine, for example, that AI would relieve us of the tiring task of transcribing hours of interview recordings or that it would sift through massive data sets and generate insights and visualizations within seconds. The dream scenario: AI frees us from repetitive tasks and allows us to focus on what’s really important – innovation and creativity.

But the question arises: How useful are the currently available tools, and what challenges do they bring? At the studio, we dug into this topic to get a clear picture of where things currently stand.

How we approached AI research tools

Our mission was to thoroughly investigate how reliable current AI-powered design and research tools are.

We formed a dedicated team of three researchers and three designers. While some team members immersed themselves in articles and courses, others extensively tested AI research and design tools within the given timeframe. We filtered through numerous tools to identify the most promising ones. Then we rigorously tested AI tools for UX research to evaluate their suitability for future integration and to understand their limitations.

Read on to get a sneak peek at our research team’s conclusions.

Please note that the following sections focus only on AI(-assisted) research tools. There are many types of AI tools; they have different capabilities and are trained differently. This blog post is not about AI tools in general but specifically about UX research tools.

5 points to keep in mind when working with AI research tools

While AI tools provide various functions, it’s crucial to acknowledge their constraints. Although some speculate that they will eventually mirror human cognition and replace human work, our experience shows that this isn’t happening yet. 😉

To make the most of AI tools in the research process, keep the following key points in mind.

1. Double-check the output of AI tools

Based on our experience, we strongly advise double-checking the output of AI tools for several reasons: AI lacks contextual awareness, may weigh information differently than a researcher would, relies primarily on textual data, and tends to provide overly general responses.

- AI is not aware of context

AI may struggle to identify the information that truly matters because it can’t grasp the broader context of the project. The tools we tried did not let us specify the project objectives and research goals, which sometimes resulted in irrelevant outputs.

Example: We uploaded a user interview transcript into a tool that creates summaries and analyses, turning qualitative data into insights. At one point, the participant went a bit off-topic and talked about something that was not strongly connected to the main research objective but was still interesting. Since the tool was not aware of the research objective, it highlighted this part as one of the most important insights. The tool let us edit the output easily, but this situation showed the need to carefully review automatically generated insights.

- AI might analyze the information differently

AI models weigh pieces of information against each other (for example, through attention mechanisms), and in practice they often treat more frequently mentioned elements as more important.

Example: The AI tool we tested generated a transcript from the recording of a usability test and extracted the most important statements and findings. The tool identified the search function as the biggest issue. The plain text (transcript) did indeed suggest this pattern, since the word “search”, and how it didn’t work as expected, was mentioned several times. However, as a researcher observing the whole session, I could easily see that the participant had far more difficulty uploading a document. Although it was mentioned only once or twice, its severity compared to the search issue was clear, which the AI tool couldn’t necessarily assess.

- AI typically relies on textual data

AI research tools rely on textual information and thus struggle to capture the full context of user behavior. They can miss subtle nuances or specific user contexts that human researchers understand intuitively. Their main limitation is their inability to effectively combine and process non-textual information (e.g., tone of voice, time on task).

Example: Children usually agree with almost everything an adult says in a test session. They also tend to say positive things about the product and its features. But their faces are like mirrors, revealing everything. An AI system processing the video transcript of a usability test with children concluded that the product was “likable, easy to use, and appealing.” But while watching the session, the children’s facial expressions and other non-verbal cues made it clear to us that they were struggling.

- AI tends to provide general answers

AI tools tend to give generalized answers because they are trained on large data sets that cover many different contexts. While this lets them answer efficiently, they may lack the nuanced, specific understanding that human experience and contextual knowledge can offer.

Example: Creating personas with AI would make researchers’ lives much easier. The idea of generating content and matching visuals sounds amazing and time-saving. However, our experience with AI-based tools revealed a common drawback: the generated content often lacked specificity. For instance, when we created a B2C persona for engagement ring buyers, the AI output was generally correct but couldn’t provide nuanced insights. It overlooked the emotional side of the process (e.g., the fear that the partner won’t like the ring or will say ‘no’). While the tool allowed manual editing, refining the persona took almost as much time as creating it without AI.

✨💡Tip: AI research tools offer a strong foundation but often rely on a single input source, usually text. Remember that qualitative data analysis is complex and requires a holistic view, including implicit meanings and non-verbal cues. So use AI smartly: check the generated output and add your own point of view to it.

2. Account for limited creativity

AI tools are good at processing information within the parameters of their training datasets, efficiently analyzing patterns. However, their strength lies in complementing human creativity rather than replacing it, as they may not generate truly innovative or out-of-the-box ideas.

Example: On one project, we needed some out-of-the-box ideas on how to proceed with research to show its value to our client, who believed they already fully understood their target group and market. We turned to the internet for ideas on how to approach the situation: we read blog posts and articles and also tried out AI tools. However, both Google and the AI tools suggested similar approaches, which, while not bad, lacked the unconventional angle we really needed. In the end, it was collaborative brainstorming sessions with my colleagues that provided the innovative solutions we needed. AI may offer valuable input, but it’s our team’s creativity and diverse perspectives that truly shine in problem-solving.

✨💡Tip: If you need a creative idea or innovation, an output generated by an AI tool can be a good starting point, but never settle for it! Keep thinking about the output you received and discuss it with your colleagues!

3. Be aware of AI hallucinations

AI hallucination occurs when artificial intelligence produces inaccurate or nonsensical outputs. This often results from biases in the training data or from the AI model’s limited understanding of context.

Example: I asked a popular AI tool a question and got an answer that seemed a bit strange at first glance, so I asked it for sources for the information it provided. When I checked the references, two out of three did not exist.

✨💡Tip: Critically evaluate the output and consider the context in which it was generated. Also, try to verify the output against other sources or references.

4. Make informed choices when it comes to AI research tools

We know that marketing copy can often be misleading, but this seems to be especially true for AI right now.

The word “AI” attracts attention and makes people believe that a product with AI must be better than one without it. Consequently, based on our experience, many tools on the market emphasize their AI features. But once you try them, you realize that they offer the same functionality as before the AI hype; they just added the word “AI” somewhere on their platform.

✨💡Tips: Numerous companies use the word ‘AI’ to boost user numbers without adding actual value. Before trying a new AI feature or tool, check its reviews, research the company, and look for information on the AI mechanisms used and how they are integrated.

5. Consider and treat AI as a junior research assistant

Artificial intelligence is very different from human intelligence: AI tools do not have a human-like nature (yet); for example, they lack emotions, abstract thinking, and creativity. But in one important respect, they are very similar to humans: they are not infallible, and they can make mistakes!

They have a lot of knowledge but less experience in applying it to new situations, just like a very talented and hardworking junior assistant who hasn’t yet had the opportunity to put together all the small pieces she has learned so far.

Just as an assistant needs time to accumulate experience, AI also needs time to improve.

Until that happens, we can use AI tools’ vast knowledge effectively, but we must stay actively involved in the process and carefully examine what they do.

Which AI tools do we recommend you try out?

Despite all these limitations, in our experience there are tools that can effectively support the research process.

As mentioned before, treat every AI tool as an assistant who does their best but whose performance is limited by a lack of experience. Still, these tools can save a lot of time and give you opinions, overviews, summaries, and ideas to work on further. But always remember to double-check the output they generate.

Here’s a short list of tools we recommend you try!

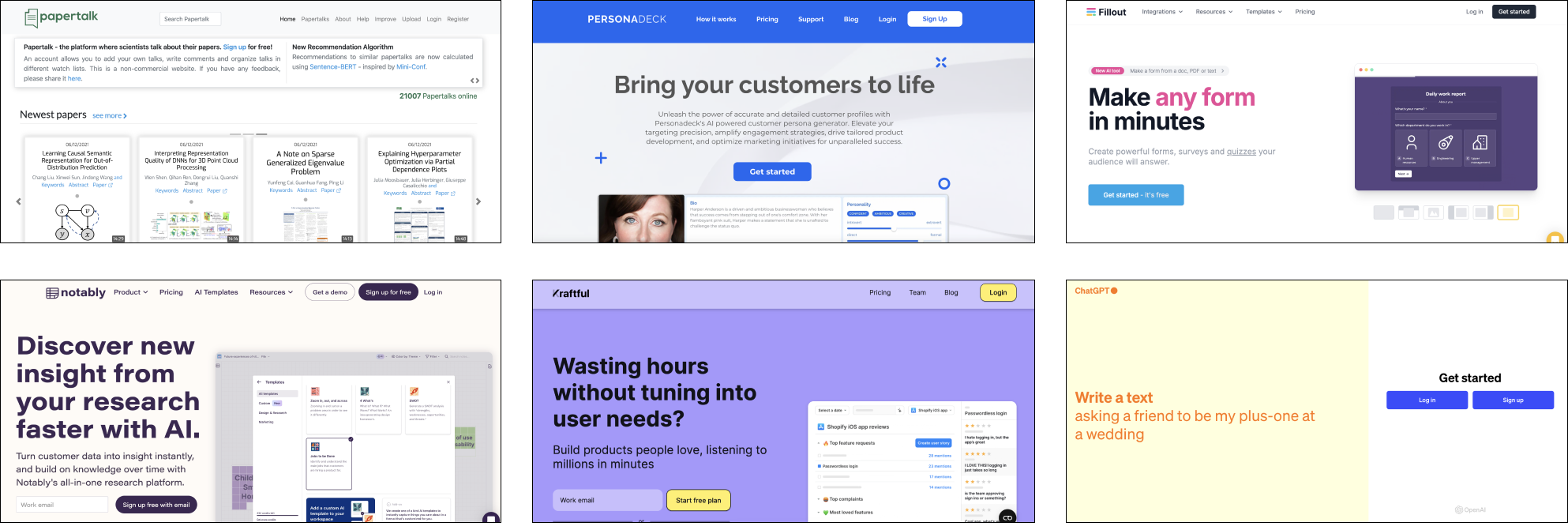

Papertalk for discovery and desk research

- What can it do for you?

– Summarizing papers and documents and extracting their key points.

– Generating actionable insights.

– Organizing papers so you can easily find what you need.

- What to pay attention to?

– The chatbot feature seems nice, but its answers are very generic and often not that useful.

– Sometimes the summary it gives is very short, and unfortunately, it is not editable.

Personadeck for persona creation

- What can it do for you?

– Creating personas with AI.

– It puts the persona in a simple but well-structured template that can be edited and fine-tuned easily.

– They promise that B2B personas are coming soon.

- What to pay attention to?

– The output is a bit too generic on its own and definitely needs some fine-tuning.

– It has some usability issues, e.g., creating multiple personas or modifying the prompt is not as easy as it could be.

Fillout for quantitative research

- What can it do for you?

– Creating a form/survey based on the topic you provide. It can be auto-generated or created based on templates.

– Reviewing the results in a data analytics dashboard.

– Integrating with various tools.

- What to pay attention to?

– It would have been a nice extra if the AI offered several suggestions to choose from.

Notably for qualitative research

- What can it do for you?

– Analyzing and summarizing research materials (transcriptions, documents).

– Letting you choose a template to put this content in the format of your choice.

– Creating transcripts for uploaded video recordings.

- What to pay attention to?

– AI-generated insights appear on a Miro board as sticky notes, but the notes are not organized. You have to arrange them manually to get a presentable output.

Kraftful for qualitative and quantitative research

- What can it do for you?

– Transforming qualitative data into insights.

– Letting you browse through the insights and ask questions about them in the AI-powered search bar.

– Building surveys (auto-generated or based on templates).

- What to pay attention to?

– Visual features were missing from the product (e.g., the possibility of creating a journey, a flowchart, or data visualizations) that could make the results more presentable.

– Chat functionality was a nice-to-have, but it was limited and gave repetitive answers.

+1 ChatGPT or Copilot as a source of ideas and inspiration

- While ChatGPT and Copilot are not dedicated UX research tools, both can be useful for getting a general overview of a topic. Even if they do not provide unique answers, they usually give a comprehensive output from which you can select what you find useful. They can also serve as effective starting points for further brainstorming.

What about the future?

Although AI technology is advancing, it has yet to reach the level of human cognition and understanding. We do not know how long it will take to overcome this challenge, but we believe that collaboration between humans and AI will be the driving force behind successful research. For this collaboration to really lead to the best results in the future, we need to continuously monitor how AI research tools evolve and what they are capable of.

Using these tools will make it clear what kind of tool we actually need and where we can harness the power of AI in the research process. That’s why it’s in our interest to explore them. Without researchers’ involvement, AI research tools will never be able to meet our needs.

After taking our first steps with AI research tools, more AI experiments will follow.

What has been your journey with AI research tools?

Want to learn more?

If you want to read more about AI and UX design, UX research, and our experiences, make sure to check out our articles and related case studies.

Do you need help with designing AI interfaces? Book a consultation with us. We will walk you through our design processes and suggest the next steps!