Is the process of prioritizing features and initiatives a battle or a pleasure?

Don’t answer. I know…

The Problem

The prioritization process is usually based upon opinion, customer anecdotes, or the loudest voice in the room. Alternatively, it is based upon “objective” criteria that fluctuate according to opinion, customer anecdotes, and loud voices.

The Solution

When you establish a fixed methodology for prioritization with buy-in from all stakeholders, argumentation gives way to discussion and quantification. The question is: which prioritization system is right for us? Allow me to detail some alternatives.

Prioritization Systems

You need a prioritization methodology to rank the potential initiatives or products in which you will invest (or not) and, at a more granular level, to rank the features planned for a product. For purposes of this article, I’ll focus primarily on the latter case: feature prioritization.

I put prioritization schemes into three broad categories:

Standard Criteria Models

These utilize the same evaluative criteria regardless of what company is employing the scheme. With these systems a priority number is calculated for each feature according to those criteria. Examples I discuss include RICE and WSJF.

Classification Schemes

Though they are often identified as such, these are not actually prioritization models per se. However, they do offer useful ways of categorizing features. Here I’ll discuss MoSCoW and KANO.

Custom Criteria Models

This approach allows your organization to establish a customized set of stable criteria that suit the organization’s particular product category, market, business priorities, company culture, or other factors. As with Standard Criteria models, a priority number is calculated for each feature. Spoiler: this is the one I like best.

Standard Criteria Models

RICE

RICE is favored by many product teams for its simplicity. It assesses the customer/market value of the feature, then qualifies that value using a realistic idea of its development cost. For each feature, a number is assigned to each criterion, and the feature’s priority score is determined by this formula:

(Reach × Impact × Confidence) / Effort

Reach (How many will this impact?) This could be measured as customers per quarter, transactions per month, or another measure, so long as the same measure is used for all features.

Impact (The level of impact the feature is expected to have for its intended purpose) Rated on a 1-5 scale. Whether the feature is designed to increase adoption, speed workflow, simplify product use, add a use case to the product, or another purpose, the rating signifies how well we expect it to accomplish that.

Confidence (How confident are we in our estimates of cost and value?) Rated 0-100%. This criterion helps tamp down our natural enthusiasm for exciting concepts. If we are not very confident in our assumptions, the priority rating goes down.

Effort (Your current estimate of cost) This can be a monetary value, labor hours, or, early on, even T-shirt sizes (mapped to numbers so the formula can be calculated). However, you must use the same measure across all features being prioritized.
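To make the arithmetic concrete, here is a minimal Python sketch of the RICE calculation. The feature names, the numbers, and the choice of person-weeks for Effort are all made up for illustration; the only things taken from the description above are the formula, the 1-5 Impact scale, and the 0-100% Confidence rating (written here as a fraction between 0 and 1).

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    reach: float       # e.g. customers per quarter (same unit for every feature)
    impact: int        # 1-5 scale
    confidence: float  # 0.0-1.0, i.e. 0-100%
    effort: float      # assumed here to be person-weeks (same unit for every feature)


def rice_score(feature: Feature) -> float:
    """Return (Reach x Impact x Confidence) / Effort for one feature."""
    if feature.effort <= 0:
        raise ValueError("effort must be positive")
    return (feature.reach * feature.impact * feature.confidence) / feature.effort


# Hypothetical features with illustrative ratings.
features = [
    Feature("Bulk export", reach=400, impact=3, confidence=0.8, effort=4),
    Feature("Dark mode", reach=1000, impact=1, confidence=0.5, effort=2),
    Feature("SSO login", reach=200, impact=5, confidence=0.9, effort=6),
]

# Highest score first.
ranked = sorted(features, key=rice_score, reverse=True)
for f in ranked:
    print(f"{f.name}: {rice_score(f):.1f}")
```

With these invented numbers, the broad-reach but low-confidence "Dark mode" edges out "Bulk export" (250 versus 240) mainly because its effort is half as large, which shows how sensitive the ranking is to the Effort estimate.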

WSJF

Weighted Shortest Job First is similar to RICE but adds a time factor to its calculation. Many teams using Agile software development favor this approach because it pushes the more time-critical features forward in the development backlog.

As with RICE, a number is assigned to each criterion, and the feature’s priority score is determined by a formula:

(User-Business Value + Time Criticality + Risk Reduction or Opportunity Enablement) / Job Duration

Another advantage of WSJF is that it lets you define the specific meanings of those standard criteria to best suit your business. Here are those criteria:

User-Business Value (Combines your assessment of value to customers and to your business) Rated on a 1-10 scale. As mentioned, your team gets to define just what lower or higher scores indicate for your business. For example, you might specify that a rating of 1-3 means that few customers will be impacted by the feature, that it offers only a moderate value proposition, and that it provides only incremental value to your business. By contrast, you might specify that a rating of 8-10 means that the feature will impact most of your customers, delivering high value that is tied to key business strategies.

Tuning what each criterion means lets the model better accommodate the particular contexts of your business. Discuss with all the relevant stakeholders, get consensus, and make a reference chart of these rating definitions.

Time Criticality (Boils up your assessment of factors like the existence of fixed deadlines, customer urgency, current impacts, etc.) Rated on a 1-10 scale. Here you might specify that a low score means the feature is not urgent, while a high score indicates that it must be completed ASAP (e.g., prompted by regulatory requirements).

Risk Reduction or Opportunity Enablement (Rather than assessing direct customer or business impact, this factor represents your evaluation of how future risk and opportunity are impacted.) Again, this is rated on a 1-10 scale. You might specify that a low score indicates that the potential opportunity impacted is vague at best, while a high score signifies that it will very positively impact well-defined opportunities or risks.

Job Duration (How long will it take?) This too is rated on a 1-10 scale, so it is important to be clear about the range of duration you want this rating to represent. For example, you might select weeks, or perhaps sprint cycles, as your measure of duration. For clarity, you must specify how many duration units are represented at each level of the 1-10 scale.

When you do the math, those features with higher User-Business Value, Time Criticality, and Risk Reduction/Opportunity Enablement that also require less time (Job Duration) will score highest. The system prioritizes the more important features that you can bring to market more quickly. Hence the name: Weighted Shortest Job First.
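The math can be sketched in a few lines of Python. This is only an illustration of the formula as written above: the backlog items and their ratings are invented, and the function simply checks that each rating falls on the 1-10 scale before dividing.

```python
def wsjf_score(value: int, time_criticality: int,
               risk_opportunity: int, job_duration: int) -> float:
    """(User-Business Value + Time Criticality + Risk/Opportunity) / Job Duration."""
    for rating in (value, time_criticality, risk_opportunity, job_duration):
        if not 1 <= rating <= 10:
            raise ValueError("each rating must be on the 1-10 scale")
    return (value + time_criticality + risk_opportunity) / job_duration


# Hypothetical backlog: (value, time criticality, risk/opportunity, job duration)
backlog = {
    "Regulatory report": (6, 10, 7, 4),  # 23 / 4 = 5.75
    "Onboarding wizard": (9, 4, 3, 8),   # 16 / 8 = 2.0
    "Tooltip cleanup": (2, 1, 1, 1),     # 4 / 1 = 4.0
}

# Highest score first.
ranked = sorted(backlog, key=lambda name: wsjf_score(*backlog[name]), reverse=True)
for name in ranked:
    print(f"{name}: {wsjf_score(*backlog[name]):.2f}")
```

In this made-up backlog the time-critical regulatory item lands on top, as intended. Notice, though, that the tiny "Tooltip cleanup" job outranks the far more valuable "Onboarding wizard" purely because it is short.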

NOTE: Both of these standard criteria schemes use cost as the denominator in the formula. In this way they risk elevating cheap features to the top of the priority list despite the low customer or business value those features might actually offer. Something to keep in mind when choosing a prioritization approach.

Classification Schemes

I’d like to touch on a couple of popular “prioritization schemes” that are not actually prioritization schemes. These serve to classify features instead, but those classifications can be useful in the prioritization process.

MoSCoW

In the case of MoSCoW, you simply categorize features into one of four buckets based upon your assessment of those feature concepts. As with WSJF, you must first specify and agree on what each of these buckets means. Then start bucketing:

Must have (These are things that are necessary to a functioning product and thus not debatable.)

Should have (These things add value for customers and/or the business, so you’d really like to have as many of these in the product as possible.)

Could have (You can think of these as things that would be nice-to-have. They add value but not at the level of the Should haves.)

Won’t have (This is not an assessment of value, but rather, a way of identifying those things that will not be included in the release being planned for any number of reasons.) “Won’t have” serves to clarify this to all and to prevent scope creep. But these features will likely surface again in planning around future releases.

Again, MoSCoW is a classification scheme that produces no prioritization ranking. So, very likely, the features designated as Should haves and Could haves will next need to be put through a separate prioritization scheme to rank those things.

KANO

The core of the KANO model is its classification scheme which, similar to MoSCoW, buckets features into categories. However, KANO also offers an analytical survey methodology that can be used to directly collect customer sentiment. Of course, this offers only the customer’s perspective, not your relevant business goals and parameters. Thus, KANO can’t serve as your sole prioritization scheme any more than MoSCoW can.

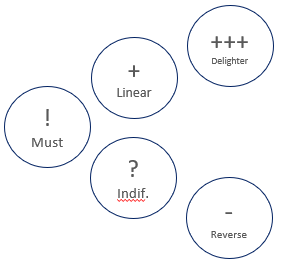

The category buckets used by KANO include:

Must haves (Those things without which you really don’t have a product.) All must be included in the product.

Linear (Those things which we know customers want from our discussions with them.) The more of these, the better.

Delighters (Things that we believe will surprise and delight customers with unexpected value.) These are few and far between. But when you have one in hand it’s worth great sacrifice and cost to get it into the product because these are the capabilities that are most differentiating and that create the most buzz with customers.

Indifferent (Those things about which customers don’t care much.) ’Nuff said.

Reverse (These are product features or attributes that actually diminish the customer’s assessment of the product.) This is a useful category because it lets us identify things that are not simply ranked low, but which actually hurt the value of the product in the customer’s eye. Sometimes these turn out to be the brilliant features we’ve invented and are passionate about. Nevertheless, they have to go.

KANO’s buckets can be used as such to categorize planned product capabilities, but like MoSCoW, the Linear features will still need a secondary prioritization to establish rank.

About that KANO survey methodology… I’ve found it difficult for customers to get through a KANO survey because it asks customers to rate each feature by selecting from a set of brain-twisting options:

I LIKE to have this capability

I LIKE NOT to have this capability

I EXPECT to have this capability

I EXPECT NOT to have this capability

I am NEUTRAL

I can LIVE with this capability

I can LIVE WITHOUT this capability

I DISLIKE having this capability

I DISLIKE NOT having this capability

It’s taxing for customers to work through this. But regardless of whether or not you use the survey approach, the KANO classifications can be valuable when considering product priorities purely from the customer’s standpoint.

Custom Criteria Model

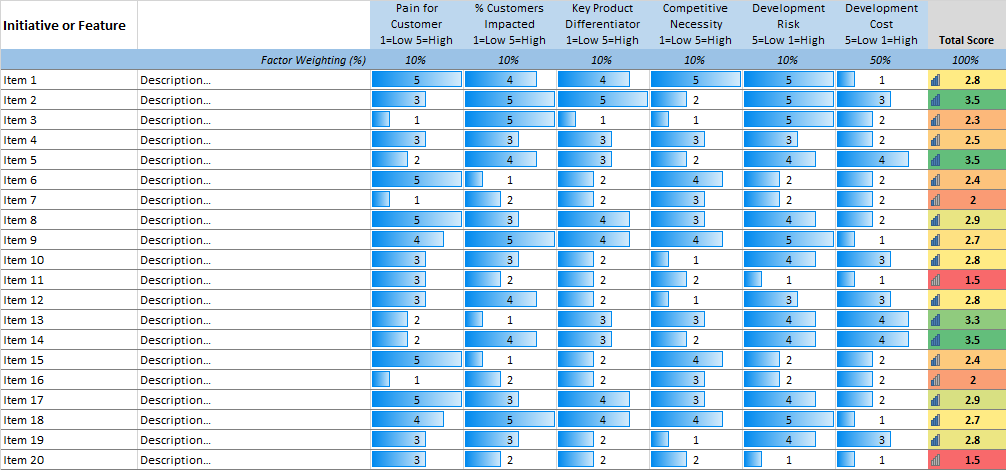

Which brings me to my favorite prioritization methodology. I like this one because you decide just which criteria best suit your organization’s particular product category, market, business priorities, company culture, and other factors. Here’s an example matrix.

As with the standard criteria methods, you assign ratings to a set of criteria to arrive at a score for each feature. But you pick the criteria to be used and, since not all criteria are equally important, you may also weight each criterion relative to the others.
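As a rough sketch of how such a weighted matrix can be scored, the Python below multiplies each rating by its criterion’s weight and sums the results. The four criteria, their weights, the 1-5 rating scale, and the feature ratings are all hypothetical placeholders; your organization would substitute whatever it agrees upon.

```python
# Hypothetical criteria and weights; a real team would agree on its own set.
WEIGHTS = {
    "customer_value": 3,
    "strategic_fit": 2,
    "revenue_potential": 2,
    "competitive_differentiation": 1,
}


def weighted_score(ratings: dict) -> int:
    """Sum of (weight x rating) over every agreed criterion."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(WEIGHTS[criterion] * ratings[criterion] for criterion in WEIGHTS)


# Illustrative ratings on an assumed 1-5 scale.
matrix = {
    "Feature A": {"customer_value": 5, "strategic_fit": 3,
                  "revenue_potential": 2, "competitive_differentiation": 4},
    "Feature B": {"customer_value": 2, "strategic_fit": 5,
                  "revenue_potential": 4, "competitive_differentiation": 1},
    "Feature C": {"customer_value": 4, "strategic_fit": 4,
                  "revenue_potential": 4, "competitive_differentiation": 2},
}

# Highest score first.
ranked = sorted(matrix, key=lambda name: weighted_score(matrix[name]), reverse=True)
for name in ranked:
    print(f"{name}: {weighted_score(matrix[name])}")
```

With these invented numbers, the evenly rated Feature C (30) narrowly beats Feature A (29), even though A has the highest customer value rating. Changing the weights changes the ranking, which is exactly why the weights deserve stakeholder agreement up front.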

Importantly, implementing a custom criteria matrix requires a significant set-up step. You must reach consensus with all the relevant stakeholders concerning which criteria are to be used in the scheme. Those stakeholders (Product, Marketing, Engineering, Sales, Finance, Business, other) will each bring their own perspective and priorities to the discussion. These discussions can be quite animated because opinions, anecdotes, and loud voices will appear. But in the end, consensus can be reached concerning the most valuable criteria to use, and with that, half the battle is won.

Part of the beauty of this system is that the criteria options are virtually unlimited, allowing the organization to reach consensus on a set of factors well-tuned to their context. Yet, consensus does not mean that we include every criterion that anyone wanted. Typically, if more than a half-dozen criteria are employed, the subsequent feature rating process becomes too complicated to manage. So, selecting criteria must be a very selective process, which means employing everyone’s best critical thinking in a thoughtful discussion.

Here are some criteria options to choose from that have proven valuable:

Of course, these are not the only choices. Create whatever criteria best suit your business. Note that, as with WSJF, you need to specify just what the rating scale is measuring and what each rating means (as shown above).

Some teams like to include a column in the matrix for the exact estimated cost of each item, then use this as a denominator for the sum of all the other criteria. Like the RICE and WSJF models, this puts cost in a dominant role for prioritization. Particularly when resources are very constrained, this may be appropriate. However, as previously noted, this approach can artificially elevate low-priority features simply because they are cheap to execute. A release that contains loads of cheap but low-value items is not one that customers will appreciate, nor one that will differentiate you from the competition.
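A small comparison shows the effect. In this Python sketch, the item names, value scores, and costs are invented purely for illustration; the same three items are ranked once by value alone and once by value divided by cost.

```python
# (name, summed value score, estimated cost) -- illustrative numbers only
candidates = [
    ("High-value integration", 30, 10),
    ("Minor UI tweak", 12, 2),
    ("Reporting dashboard", 24, 6),
]

# Rank by value alone, highest first.
by_value = sorted(candidates, key=lambda c: c[1], reverse=True)

# Rank by value divided by cost, highest first.
by_value_per_cost = sorted(candidates, key=lambda c: c[1] / c[2], reverse=True)

print("By value:         ", [name for name, _, _ in by_value])
print("By value per cost:", [name for name, _, _ in by_value_per_cost])
```

Here the order flips completely: the cheap "Minor UI tweak" moves from last place to first once cost becomes the denominator, while the most valuable item drops to the bottom.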

Conclusion

In the end, the prioritization methodology you use is a choice that all the relevant stakeholders need to buy into, because you all need to stick with what you’ve picked for a long time. It’s well worth enduring all the opinions, anecdotes, and loud voices while you deliberate which methodology is right for your organization, because that methodology will be the very mechanism that quiets those things going forward.

Next

Want the Excel of the Custom Criteria Model and sample criteria list? Contact me

Share this article with someone who might be interested

Comment about what’s so wonderful about this article (or what is not, if you must)